A “Mind” For My GoPiGo3?

The desire for more GoPiGo3 robot abilities pushed me to look for open-source, componentized robot functions I could easily add to my GoPiGo3. The latest has been the “Robot Operating System” ROS 2 Kilted Kaiju for my robot Dave. The localization and navigation features offered by ROS 2 have proved not to be “drop in, it just works” components, and ROS 2 lacks a meta-level robot existence component to make use of the localization and navigation features even if I could get them to function reliably.

Another goal for my robots has been for them to be self-contained, “autonomous” mobile entities with persistent memories of the actions they took and the environment they experienced, but “deeply understanding the robot environment” has remained an elusive (and computationally expensive) feature.

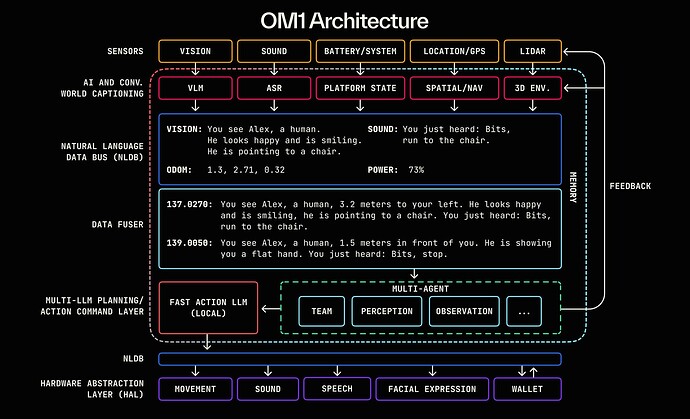

The OpenMind folks have released OM1, an “OS for Intelligent Machines”. Oh, now we’re talking: I want my GoPiGo3 robot to be “Intelligent”. This is an LLM (Large Language Model) that has been trained on how to interpret data from a variety of sensors, what typical robot tasks and goals are, and how to break those tasks and goals into commands for typical robot effectors.

While this “mind” will run on a Pi5 with 16GB of memory, the GoPiGo3 is designed to be controlled by a Pi2 through Pi4 generation board, so an “Intelligent GoPiGo3” will be best served by an off-board “mind” and a GoPiGo3 hardware abstraction layer.
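To make the "off-board mind plus hardware abstraction layer" idea a little more concrete, here is a minimal sketch of what the robot-side half might look like. Everything in it is an assumption for illustration: the JSON message shape, the `DriveBase` interface, the `RecordingBase` stand-in, and `handle_command` are hypothetical names, not part of OM1 or the GoPiGo3 library. A real version would wrap the actual GoPiGo3 driver behind the same interface and receive messages over the network.

```python
import json
from typing import List, Protocol, Tuple


class DriveBase(Protocol):
    """Hypothetical minimal interface an off-board mind would command."""

    def drive_cm(self, dist: float) -> None: ...
    def turn_degrees(self, degrees: float) -> None: ...
    def stop(self) -> None: ...


class RecordingBase:
    """Stand-in for real hardware: records calls instead of moving motors."""

    def __init__(self) -> None:
        self.log: List[Tuple[str, float]] = []

    def drive_cm(self, dist: float) -> None:
        self.log.append(("drive_cm", dist))

    def turn_degrees(self, degrees: float) -> None:
        self.log.append(("turn_degrees", degrees))

    def stop(self) -> None:
        self.log.append(("stop", 0.0))


def handle_command(base: DriveBase, message: str) -> bool:
    """Decode one JSON command (assumed format) and apply it to the base.

    Returns True if the command was understood. Anything malformed or
    unknown stops the robot and returns False, so a confused "mind"
    fails safe.
    """
    try:
        cmd = json.loads(message)
        action = cmd["action"]
        value = float(cmd.get("value", 0.0))
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        base.stop()
        return False

    if action == "drive_cm":
        base.drive_cm(value)
    elif action == "turn_degrees":
        base.turn_degrees(value)
    elif action == "stop":
        base.stop()
    else:
        base.stop()
        return False
    return True


if __name__ == "__main__":
    base = RecordingBase()
    handle_command(base, '{"action": "drive_cm", "value": 20}')
    handle_command(base, '{"action": "turn_degrees", "value": 90}')
    print(base.log)
```

The point of the narrow interface is that the "mind" never needs to know which Pi or motor controller sits underneath; swapping `RecordingBase` for a class that calls the real robot would not change `handle_command`.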

This is the architecture diagram for the OM1 mind:

(I will not have time to look into giving Dave an “OpenMind”, because I accidentally updated Carl’s Buster Pi OS thinking I was updating my Trixie Pi OS desktop Pi5. This broke Carl’s grammar-based speech recognition. I am going to have to either solve the issue or revert Carl to his last backup.)