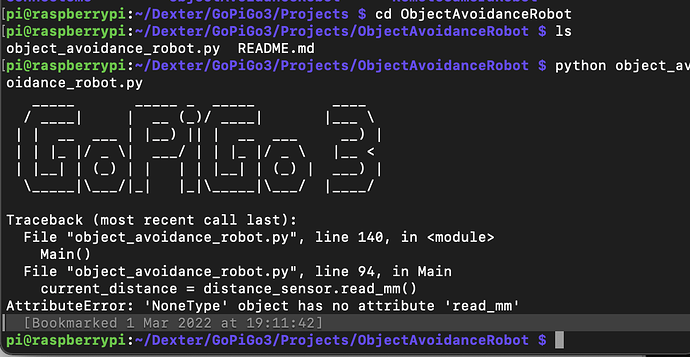

I’ve tried using the Obstacle Avoidance Robot from Dexter/Projects, but when I run it, it comes up with errors.

Not from us.

You can do what other students have done, and search the web.

There are a few threads where other students have had similar projects, so a search here might help too.

The errors you are encountering look like programming errors, or you are using Raspbian Bullseye.

You should use either GoPiGo OS or Legacy Raspbian.

You really need to learn to read the code before you try it, and learn to read the trace backs.

Unless your robot has the sensor the code is expecting, yes, you will see an error.

Start by understanding every line, every word of the small examples, and try to get the big picture of the GoPiGo3 EasyGoPiGo3 class. You will get nowhere until you understand that class: what it is, what it does, and how to use it.

You cannot adapt a program to your needs without studying that program first to understand it.

You cannot start designing before you understand the programming language, the robot API, and really understand how the examples are designed for data generation, data flow, data consumption/use, and code organization, execution, and decision points.

Quit looking for an easy answer.

I have filed “ObstacleAvoidanceRobot Does Not Fail Correctly For No Distance Sensor Case” to ask the error handling be improved when no I2C connected Time Of Flight Distance Sensor is installed on the GoPiGo3 attempting to run this program.
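As a rough illustration of the kind of error handling being asked for, here is a minimal sketch. It is not the project's code. `open_distance_sensor` and `main` are hypothetical names, and `factory` stands for whatever call creates the sensor on your robot (on a GoPiGo3 that might be the `init_distance_sensor` method of an EasyGoPiGo3 object, but check that against your installed library).

```python
def open_distance_sensor(factory):
    """Return a sensor object, or None if the sensor cannot be opened.

    `factory` is any zero-argument callable that builds the sensor.
    Passing it in is an assumption made so the sketch runs without hardware.
    """
    try:
        sensor = factory()
    except Exception as err:  # e.g. an I/O error when nothing answers on the bus
        print("Distance sensor not available:", err)
        return None
    if sensor is None:
        print("Distance sensor not available: factory returned None")
    return sensor


def main(factory):
    """Exit cleanly with a readable message instead of a trace back."""
    sensor = open_distance_sensor(factory)
    if sensor is None:
        print("Exiting: this program needs a distance sensor.")
        return 1
    print("Distance sensor found, starting.")
    return 0
```

The point of the sketch is only that the missing-sensor case is checked once, up front, so the user sees one clear message rather than an error from deep inside the main loop.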

Perhaps a pull request is the appropriate way to get this solved?

Possibly, but this is such a trivial issue that I really only raised it to document it. The code works for the intended usage AND:

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE.

The name “object_avoidance_robot” is a slight misnomer. The program “avoids” hitting an obstacle by stopping forward travel when it sees an obstacle, but that “simplified avoidance” becomes immediately obvious if anyone reads the code before attempting to run it just based on the name of the file.

Obstacle Avoidance can range from as simple as stopping to “Avoid Hitting An Obstacle”, as the object_avoidance_robot.py script does with an I2C Time Of Flight Distance Sensor (not the Grove Ultrasonic Ranger), to intensely complex, using heuristics, as in the Intelligent Obstacle Avoider Robot, which again uses the TimeOfFlight Distance Sensor, this time mounted on a panning servo.

As far as “Complete Code For Obstacle Avoidance Using A Grove Ultrasonic Ranger On A GoPiGo3”, no - no one to my knowledge has posted an example of that.

I did make a “wander.py” program based on the obstacle_avoidance_robot.py, (saying again using the Dexter I2C Time Of Flight Distance sensor, not the Grove Ultrasonic Ranger), that implements an “avoid_obstacle()” function that simply turns 135 degrees when an obstacle is detected, (and wander.py also adds low battery protection to the robot.)

I doubt that is what you meant by “complete code”.
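For anyone who wants to see the shape of the "turn away when something is close" idea described above, here is a minimal sketch. It is not the actual wander.py. The 250 mm threshold is a made-up value, and `robot` and `sensor` are any objects that provide `forward()`, `stop()`, `turn_degrees()` and `read_mm()`. Those names are chosen to resemble the EasyGoPiGo3 style, but treat them as assumptions and check them against the library you have installed.

```python
def avoid_obstacle(robot, turn_degrees=135):
    """Stop, then turn in place away from the obstacle."""
    robot.stop()
    robot.turn_degrees(turn_degrees)


def wander_step(robot, sensor, stop_distance_mm=250):
    """Run one decision cycle and return the action taken.

    stop_distance_mm is a hypothetical threshold, tune it for your robot.
    """
    distance_mm = sensor.read_mm()
    if distance_mm <= stop_distance_mm:
        avoid_obstacle(robot)
        return "avoid"
    robot.forward()
    return "forward"
```

On a real robot, `wander_step` would be called repeatedly in a loop with a short sleep between calls. Keeping the decision in one small function makes it easy to try out with stand-in objects before putting the robot on the floor.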

As @cyclicalobsessive has said, there are no easy answers.

There have been a few other students here trying to do similar things with Grove boards and/or GoPiGo3 robots, and it’s always been work. There’s no “silver bullet” and there’s no magic hat with a rabbit in it.

======================

Let me tell you about my project.

I found an example program called “remote camera robot” that used a mouse and/or touch-panel to drive the robot around with the camera providing a FPV of where the robot was headed.

I didn’t like the way the mouse control worked, so I had the brilliant idea of modifying it to use a joystick, (like the ones used in flight simulators), to control the robot. Since there was already mouse control, I figured it would be easy - just substitute the joystick for the mouse and I’m done - right?

Wrong!

It has taken me several years to get this project even close to completion because I was starting from the very bottom and working up. I had to learn everything: From the basics of Python to how to write controller code and interface to the robot’s libraries.

I went down many wrong paths, made tons of stupid mistakes, and discovered there’s more to robotics than just plopping a robot on the floor and waving a remote control at it.

I had to:

- Learn what the robot could and could not do at the most basic level, and learn how to make the robot do these things.

- Gain a basic understanding of what the robot libraries did and how they did it. Note that I still don’t have a really thorough understanding of those libraries, but I’m learning.

- Learn what I have to do to make the robot do what it could do - not necessarily what I wanted it to do, but what it could do.

- Learn that the original code that controlled the robot was not suitable, and I discovered that I would have to - in essence - completely re-write the controller code from top to bottom to make it talk to a joystick.

- Develop the infrastructure to help me code effectively - a GitHub repository, how to use Visual Studio Code, how to do remote code execution on the robot, and so on. This itself was a non-trivial task.

- Learn the ins-and-outs of the base operating system itself so that I could work with it instead of spending my time fighting with it.

- Ultimately, I had to take the code from the original project apart - line by line - and try to figure out “what makes it tick” - learning Python along the way since I had very little experience programming in Python.

After doing all that, I had a still tougher, and more important, task to do: I had to decide what the ultimate design of the robot’s joystick control system would be. I had to decide what it could do, what it must do, and what I could postpone until later.

As I did this, I learned things about writing code to run in a browser context, how to interface that with a server running on the robot, all the stupidities and rules that applied, so on, and so on, and so on. I even had to learn how to use nginx as a reverse proxy and set up a secure, (https), browser context so the joystick would work.

My project is now about 80% complete - I can drive the robot around with the joystick - but I still do not have the desired control over the “head” pan-and-tilt, and I cannot provide a controller definition file so that other joysticks and gamepads can be used with the program.

Of course I had help. I spent hours, days, weeks, and months on-line trying to figure out how to make “this thing” work or discover why “that thing” isn’t possible. People here, (and other places on line too), generously offered their time and skill to help me understand what I had to do.

The bottom line is that there wasn’t a “plug-and-play” solution. Paraphrasing Winston Churchill, it took a lot of “blood, toil, tears, and sweat” to get where I am today - and there’s still a lot left to do.

You won’t find a simple, pre-compiled solution that you can just drop in, write the report, and be done with it. It’s going to be work - a LOT of work, but it can also be fun if you let it.

We are ready to help in whatever way we can, but that’s all we can do - provide assistance and guidance. The lion’s share of the work will rest on your shoulders.

Paraphrasing Mission Impossible: Your mission, (if you choose to accept it), is to make the project a success. We can help, but it is ultimately up to you.

There’s one last thing:

Please don’t use the ultrasonic distance sensor, it will just make things difficult for you.

The folks at Dexter Industries originally designed the ORIGINAL GoPiGo (not the GoPiGo-3) to use the ultrasonic distance sensor because it was less expensive, and they wanted to make the robot more affordable.

However, they rapidly discovered that the low price came with a cost: Accuracy left a lot to be desired - especially at distances - noises interfered with it, and it wasn’t the easiest thing to work with.

So, they abandoned the ultrasonic sensor in favor of a - more expensive, but much more accurate - time-of-flight sensor for their later robot models.

You would do well to do the same thing. It will save you a lot of time and grief.

I definitely agree, and I know that there aren’t any easy answers. I have learned that during my degree, and in the future I will have to keep working hard and accepting the challenges and problem solving. That is coding at the end of the day, and it is what I enjoy doing. I do apologise if I came across as wanting to find an easy solution.

I think that lack of confidence did play a role here as well. At the beginning of the project, before signing the form to confirm that I was doing it, I emailed the head of department at my university because I was really excited and wanted to tell him that this was the project I wanted to do: ‘The Autonomous Car’. I was really determined that I could do it despite not having programmed in Python before, because I was genuinely interested in working with the Raspberry Pi and the car itself. But the response I received was that my project was ‘overly ambitious’ and that I wouldn’t be able to do it. I’m not usually a sensitive person, and I don’t usually take things like this personally, but it did stick with me for a while. Despite my being determined and eager, it did make me doubt my own abilities sometimes.

Your project sounds really interesting! I like the idea of using a Joystick to control the robot. But yes I can see how that would take a long time to complete.

I have made mistakes with my project too; downloading the wrong OS was probably the main one. I downloaded Raspbian for Robots and got a ‘firmware version error’, so I used Raspbian Legacy instead. I definitely had a similar experience at the beginning of my project as well: I had to learn Python and the different libraries, understand functions and how I could implement them in my project, and learn what OpenCV was and how I could use it. It was a steep learning curve for me because I’ve never done anything like this before, but I did really enjoy working with robotics and I think I definitely will in the future. It has just been really challenging for me.

That sounds really interesting with a server running on the robot. I’m learning about nginx at the moment as well in my course.

That’s great that your project is almost complete! I get that though; I guess one way of looking at it is that there is constantly a challenge or a problem to solve.

No, I definitely agree that there isn’t an easy solution. I have really enjoyed working on this project. I have definitely been stressed at times and doubted whether I could actually achieve it (thanks, head of department), but I wouldn’t change it.

I do really appreciate all the help that you and everyone have offered me, thank you. But I know that I will try myself before asking, unless I’m really struggling, and I won’t ask for complete code or an easy solution.

Also I have successfully been able to implement obstacle detection for the autonomous car.

I had a meeting with my supervisor and he is really happy with the work that I have done. I did use the Grove ultrasonic sensor and, to be honest, it’s working fine. I did see on some of the threads that some people have said it’s inconsistent, but as far as I’m aware I’m not having any problems with it. I’ve tested it quite a lot and it’s working fine.

I will definitely consider that in the future though for sure!

I do have until mid April to hand in my code and my report so I may try and implement traffic light detection as well. But I need to focus on my report as well.

Sounds like you’ve got the right idea.

The misunderstanding came from your title, as well as one or two other things you mentioned where it seemed like you were looking for an “easy out”. Not that I blame you - after banging my head for a few weeks, I start wondering if there isn’t a plug-and-play solution somewhere. Usually what I find is “plug-and-pray” instead of “plug-and-play”.

I am glad you got this beastie to work, great job - especially with the ultrasonic sensor!

I would caution you to concentrate on the requirements of the assignment and get that done, before you get distracted with enhancements. It’s better to get the one part done, and done well - including the report! - instead of trying to do many things and getting nothing done.

Let us know how it goes!

This is great news. While the ultrasonic sensor has some disadvantages, it has some REALLY BIG advantages, and I am very happy to see that you have made it work for you.

Advantages of Ultrasonic Sensor over TimeOfFlight Light Sensor:

- cost, obviously - $4 vs $30 is why almost every car on the road today has ultrasonic sensors in the front and rear bumpers

- Not fooled by black objects - I have a robot which has both the Grove Ultrasonic and Dexter ToF (IR light) as well as a 360 degree view LIDAR sensor. If that bot relied only on the ToF sensor and/or LIDAR, it would run into my black filing cabinet, my black trashcan, my black UPS, and my black chair.

There are some disadvantages of the Ultrasonic sensor (in a home or educational robot):

- detection range may vary greatly for cloth covered obstacles

- some humans hear the sensor emissions or annoying harmonics

- slower sample rate than ToF sensors (limited by the physics and mechanics)
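To put a rough number on the "limited by the physics" point in the list above: an ultrasonic ping has to travel to the obstacle and back before the next one can be sent. The sketch below works out that round-trip time. The 3.5 m maximum range is a hypothetical figure used only for the arithmetic, and the function names are made up for this example.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at roughly 20 degrees C


def min_ping_interval_s(max_range_m):
    """Shortest time between pings: the echo must travel out and back."""
    return 2.0 * max_range_m / SPEED_OF_SOUND_M_PER_S


def max_sample_rate_hz(max_range_m):
    """Upper bound on readings per second set by the speed of sound alone."""
    return 1.0 / min_ping_interval_s(max_range_m)


if __name__ == "__main__":
    # Hypothetical 3.5 m maximum range
    print(round(min_ping_interval_s(3.5) * 1000, 1), "ms per ping")
    print(round(max_sample_rate_hz(3.5)), "readings per second at most")
```

With those assumptions the round trip takes about 20 ms, so the ceiling is around 49 readings per second, and real sensors need extra time for echoes to die down, so practical rates are lower. A light-based sensor does not have this particular limit, because light covers the same distance in a tiny fraction of that time.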

BTW, one of the very first RaspberryPi based autonomous car demonstrations I saw years back, was from Carnegie Mellon University doing lane following, stop light detection, and obstacle detection. That car used an ultrasonic ranger for obstacle detection.