I saw a neat video of a robot finding and lining up with its dock using three large circles and OpenCV.

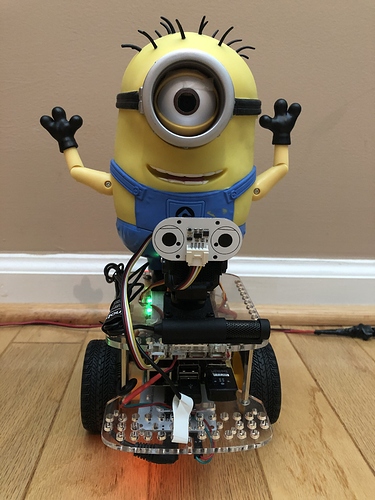

So I thought I’d try that with Carl. Carl can already line up within +/- 2 inches of the docking centerline, but that is not precise enough for reliable docking. I think Carl needs to be within +/- 0.5 inch of the docking centerline.

I created the appropriate sign for Carl to use, with two 3" circles on 4" centers, and proceeded to investigate what Carl “sees” when he is lined up and when he is 4" off center.

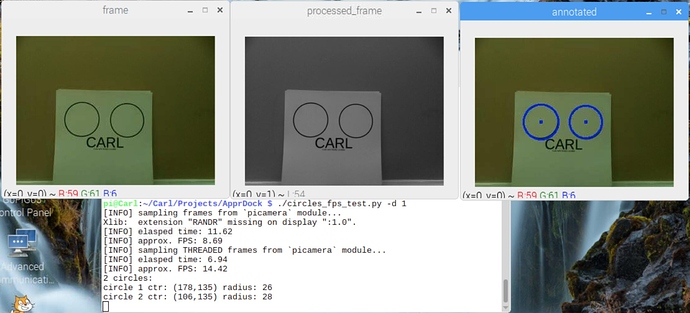

Here is what he sees when 16" from the dock:

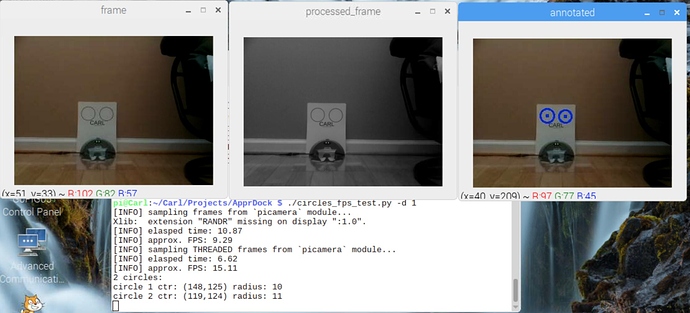

And here is what he sees when 40" from the dock:

The circles radius changes by about 17 pixels in 24 inches or 0.71 px/inch when using 320x240 resolution video from the picamera.

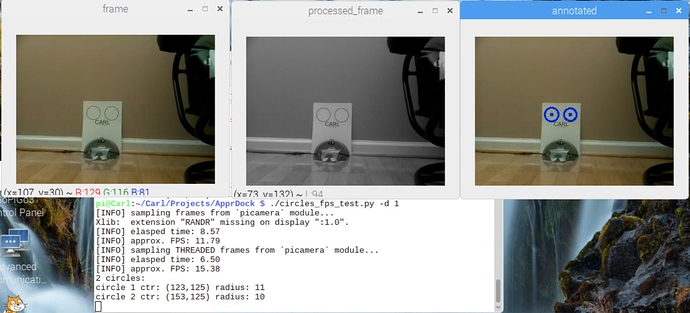

Here is the “experimental result” at 40 inches, both lined up and 4" to the right of center:

Circles when lined up are left: 11, right: 10 pixels radius,

and circles when 4" to the right of lined up are left: 11, right: 10 pixels radius.

Why are they the same?

Now I need to determine the difference in distance to the right circle versus the left circle when Carl is not on the centerline. If Carl is only 2" off center when 16 inches from the dock, the difference in distance to the right versus the left circle is 0.37 inches.
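As a rough check of that geometry, here is a minimal Python sketch. It assumes a flat top-down view, with the camera a given distance in front of the sign and offset sideways from the centerline. The function name and the `half_spacing_in` parameter are hypothetical, chosen only for illustration. One caveat: the 0.37 inch figure comes out when the circle centers sit 1.5" either side of the centerline. With 4" centers (2" either side) the same calculation gives about 0.49 inches, which is still a tiny difference.

```python
import math

def range_difference(distance_in, offset_in, half_spacing_in):
    """Difference in straight-line distance (inches) from the camera to the
    far circle versus the near circle, in a top-down view.

    distance_in     -- distance from camera to the sign, along the centerline
    offset_in       -- sideways offset of the camera from the centerline
    half_spacing_in -- distance of each circle center from the centerline
    """
    far = math.hypot(distance_in, offset_in + half_spacing_in)
    near = math.hypot(distance_in, offset_in - half_spacing_in)
    return far - near

# Circle centers 1.5" either side of the centerline (assumption, see above).
print(round(range_difference(16, 2, 1.5), 2))  # 0.37
# Circle centers 2" either side, i.e. 4" center-to-center spacing.
print(round(range_difference(16, 2, 2.0), 2))  # 0.49
```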

Uh oh, I’m starting to see the problem here. A difference of 0.37 inches * 0.71 pixels per inch = 0.26 pixels. Even if I bump up the resolution to 640x480, I get only about half a pixel. And this is for 2 inches off center and up close.

The difference in hypotenuse distance when 2 inches off center at 40 inches away is only 0.15 inches, which is 0.1 px at 320x240 or 0.2 px at 640x480.
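The pixel arithmetic can be checked in a few lines of Python. This is only a sketch under the same assumptions as the text: a constant radius scale of 17 px per 24 inches at 320x240 that simply doubles at 640x480. In a real camera the apparent radius falls off roughly as 1/distance, so the constant scale is just an average over the 16" to 40" range. The constant and function names are hypothetical.

```python
PX_PER_INCH_320 = 17 / 24  # measured: 17 px radius change over 24 inches

def radius_difference_px(range_diff_in, width_px=320):
    """Expected left/right radius difference in pixels, assuming the radius
    scale is linear in distance and proportional to image width."""
    return range_diff_in * PX_PER_INCH_320 * (width_px / 320)

# Distance differences (inches) taken from the text, for 2" off center.
for label, diff_in in (('16" away', 0.37), ('40" away', 0.15)):
    for width in (320, 640):
        px = radius_difference_px(diff_in, width)
        print(f"{label}, {width} px wide: {px:.2f} px")
```

Every case comes out well under one pixel, which would explain why the measured radii were identical on and off the centerline.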

No way I’m going to be able to navigate to +/- 0.5 inches of the centerline using the circles.

Looks like Carl’s one eye is just not as good as I was hoping for.

p.s. The bot in the video has extensive physical tolerance for the final docking, as well as a middle-forward-extended-circle, and a floor crack in the center that might have been better than the circles.
