With Create3-Wali, I was hoping to get sufficient pose estimates without LIDAR by using vision+depth. Since Dave already has encoders and a LIDAR, I will be able to test several sensor combinations to find “sufficient pose estimation” with minimal power and processor load.

My goal has always been to wander, searching for “unknown” visual objects, then identify/classify these objects using Internet search and/or human dialog, and extend Dave’s “known object” recognition blob.

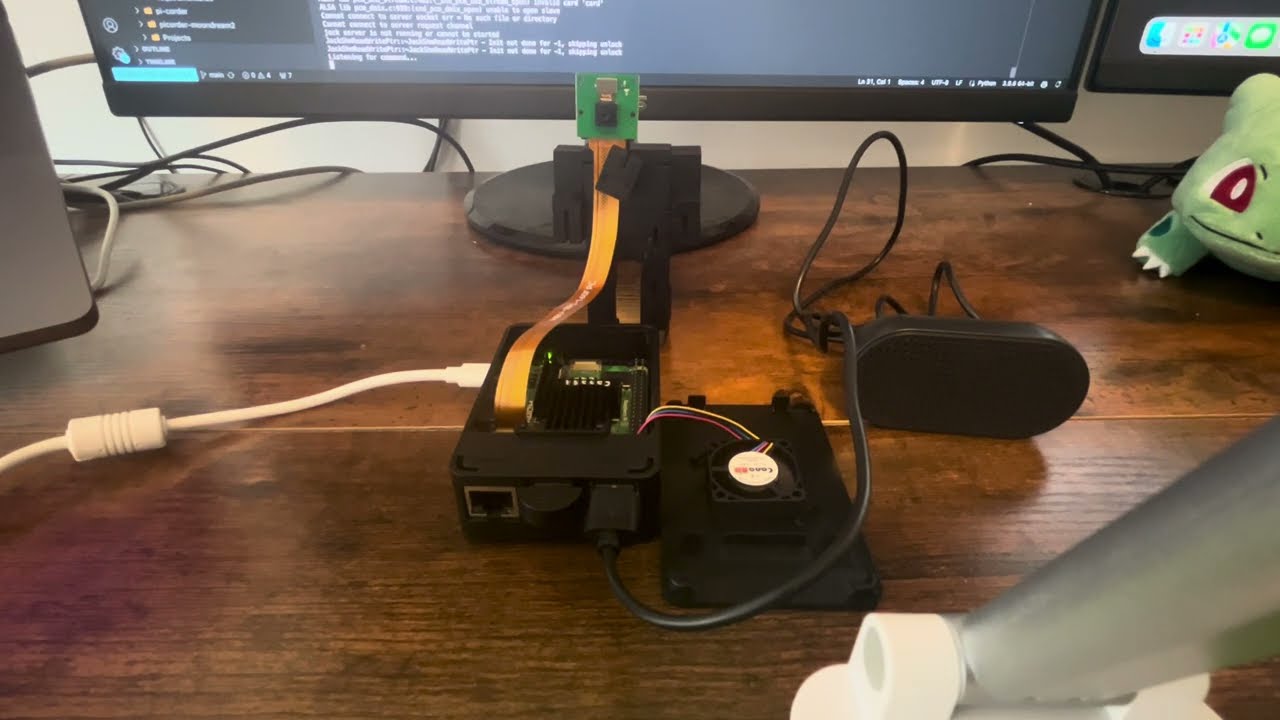

There is an interesting, totally autonomous Pi5 visual object dialog demonstration (on GitHub) called Pi-CARD that is similar to what I envision. Pi-CARD supposedly can snap a frame with a PiCam and feed that frame to its LLM to carry on a dialog about the objects in it. This video demonstrates the speech recognition and large language model dialog capabilities running on a Raspberry Pi 5: