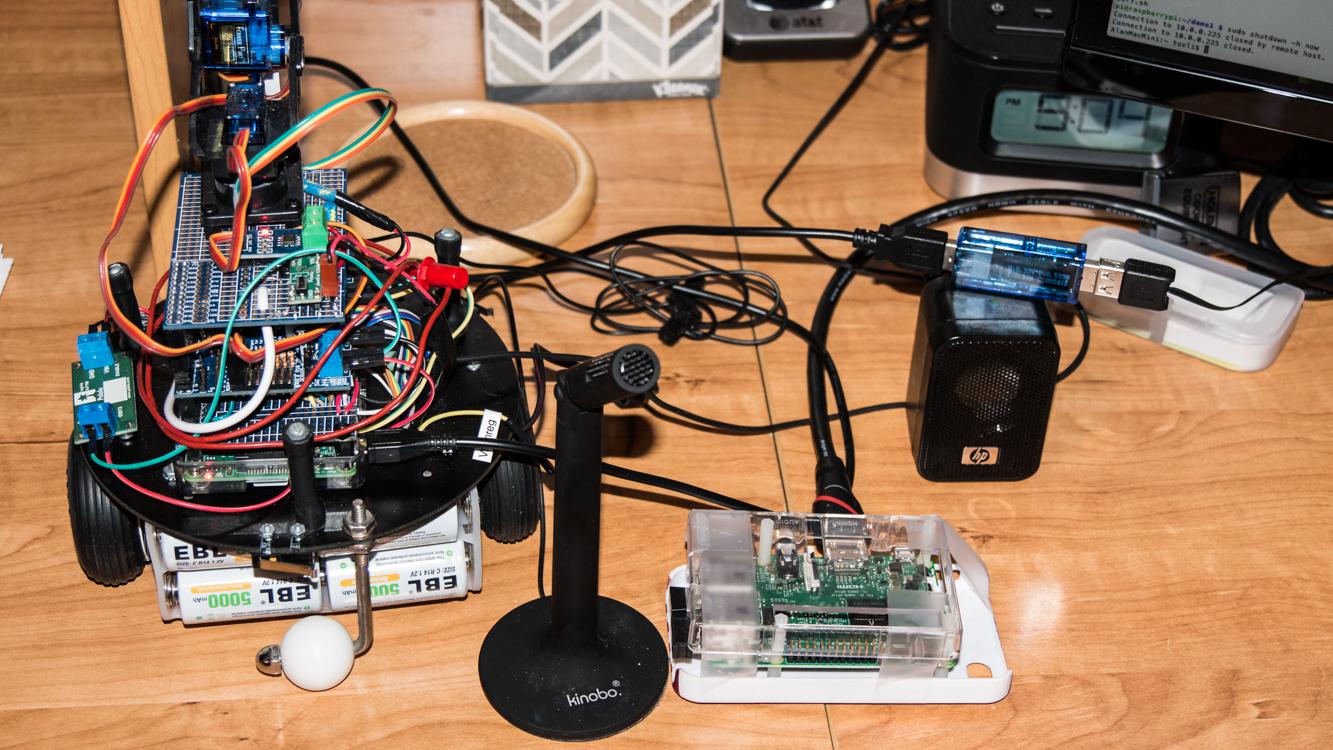

I started my career (40 yrs ago) not even wanting an OS - terrible NIH syndrome. Today, it is totally inefficient to ask even a 4 core 1.4GHz 1GB (split) memory “brain” to perform every feature I want in my bot, but I would like to maximize the local capabilities and then layer some cloud capabilities as non-required, enhanced abilities.

Integrating speech recognition (one of my career specialties), and using visual object recognition to enable self-managed learning and self-managed battery charging, are on my robot bucket list.

I’ve done some PocketSphinx testing, and need to learn to use OpenCV.

My plan for OpenCV starts with implementing one of the simple Braitenberg vehicles, steering by comparing the average intensity of the left and right halves of periodically captured images.
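A minimal sketch of that left/right intensity comparison, in plain Python so it runs anywhere. It assumes the frame has already been converted to grayscale and handed over as a list of pixel rows (with OpenCV the frame would be a NumPy array instead). The names `half_means` and `braitenberg_speeds`, and the `base` and `gain` values, are made up for illustration and are not tuned for any real motor driver.

```python
def half_means(gray):
    """Return (left_mean, right_mean) brightness of a grayscale frame.

    `gray` is a list of rows, each a list of 0-255 pixel values.
    """
    mid = len(gray[0]) // 2
    left = [p for row in gray for p in row[:mid]]
    right = [p for row in gray for p in row[mid:]]
    return sum(left) / len(left), sum(right) / len(right)


def braitenberg_speeds(gray, base=100, gain=150, crossed=True):
    """Map left/right brightness to (left_motor, right_motor) speeds.

    crossed=True: each half of the image drives the opposite wheel,
    so the brighter side makes the far wheel faster and the vehicle
    turns toward the light. crossed=False turns it away instead.
    """
    left_mean, right_mean = half_means(gray)
    left_drive = left_mean / 255.0    # normalize to 0.0 .. 1.0
    right_drive = right_mean / 255.0
    if crossed:
        left_drive, right_drive = right_drive, left_drive
    return base + gain * left_drive, base + gain * right_drive


if __name__ == "__main__":
    # Tiny fake frame: bright on the left half, dark on the right half.
    frame = [[255, 255, 0, 0],
             [255, 255, 0, 0]]
    print(braitenberg_speeds(frame))
```

With the crossed wiring, a frame that is brighter on the left speeds up the right wheel, which steers the bot left toward the light.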

Later, I want to integrate some form of assisted learning of “home objects useful to a home robot” (collect periodic images with situational information while wandering, “notice” similar objects in the collected images, ask human for identification, expanding an RDF object DB). Perhaps the object recognition load needs to be split between local and cloud.

I feel that Carl needs a minimal level of intrinsic speech recognition, so I’m not rushing into Google Voice or Alexa. It will be a challenge to design a good man-machine speech interface for Carl under the heat and computational limits.

I’m hoping to use sound level measurement, plus periodic and sound-triggered image interpretation, to trigger running the speech recognition engine for short dialog sessions, then return to a low power consumption mode.
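The sound level part of that trigger could look something like the sketch below. It assumes audio arrives as chunks of signed 16-bit samples (how they are captured is left out), and it only wakes the recognizer after several loud chunks in a row so a single click or bump does not start a session. The threshold and chunk count are placeholder values, and `should_wake` is a hypothetical helper name.

```python
import math


def rms(samples):
    """Root-mean-square level of one chunk of audio samples."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def should_wake(chunks, threshold=1000.0, min_loud_chunks=3):
    """Return True once `min_loud_chunks` consecutive chunks are loud.

    `chunks` is any iterable of sample lists; a quiet chunk resets
    the count, so isolated noises are ignored.
    """
    run = 0
    for chunk in chunks:
        run = run + 1 if rms(chunk) >= threshold else 0
        if run >= min_loud_chunks:
            return True
    return False


if __name__ == "__main__":
    quiet = [10, -10] * 100
    loud = [3000, -3000] * 100
    print(should_wake([quiet, loud, loud, loud]))  # sustained sound
    print(should_wake([loud, quiet, loud, quiet]))  # isolated noises
```

Only when `should_wake` returns True would the (much more expensive) speech recognition engine be started for a short dialog session.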

The Raspberry Pi 3B/B+ can do a pretty good job of limited speech reco, and non-real-time visual object recognition if allowed to focus most of its resources on one major task at a time. The Dave/Egret model will need to be Carl’s operational and interface modes.

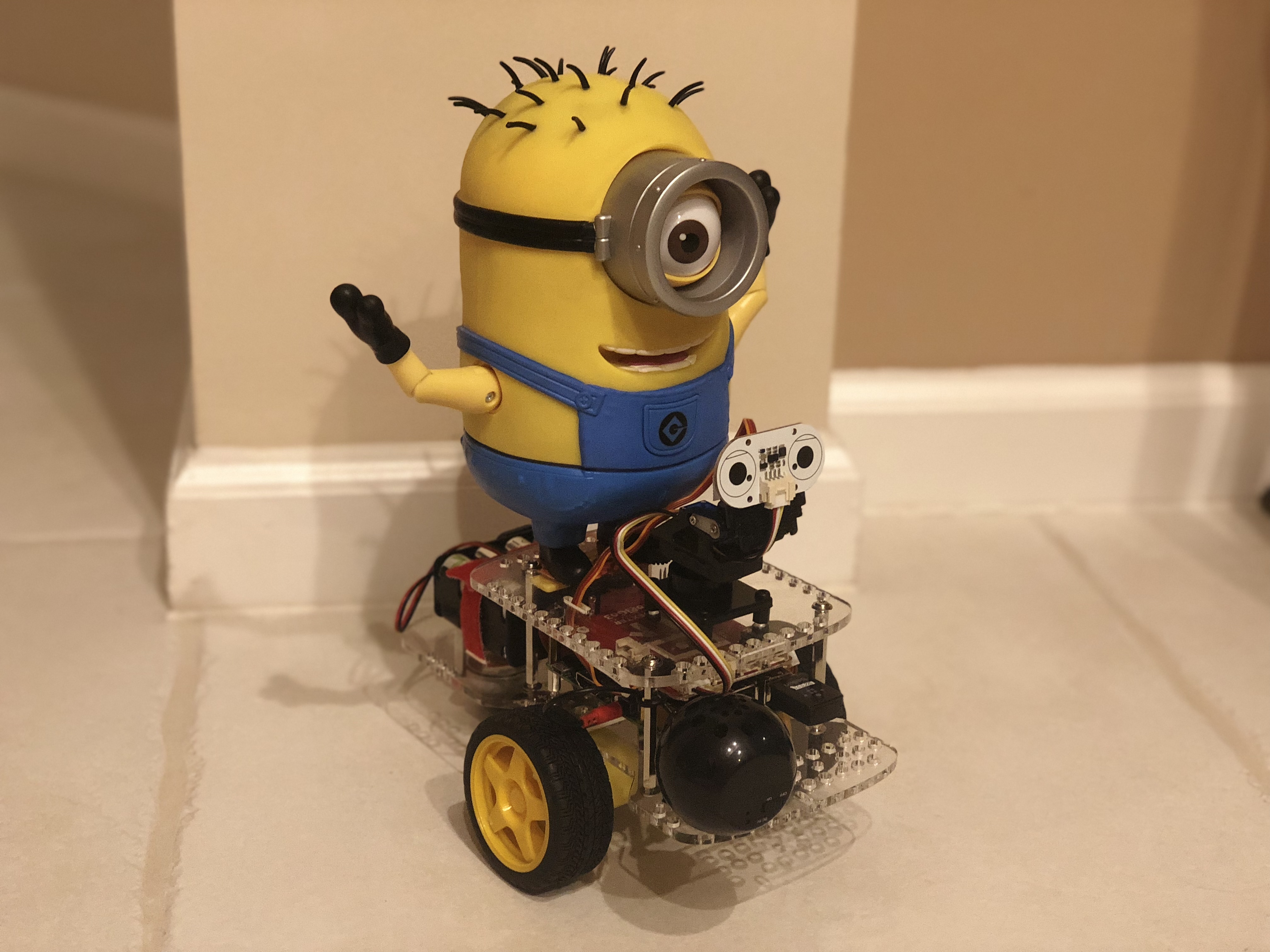

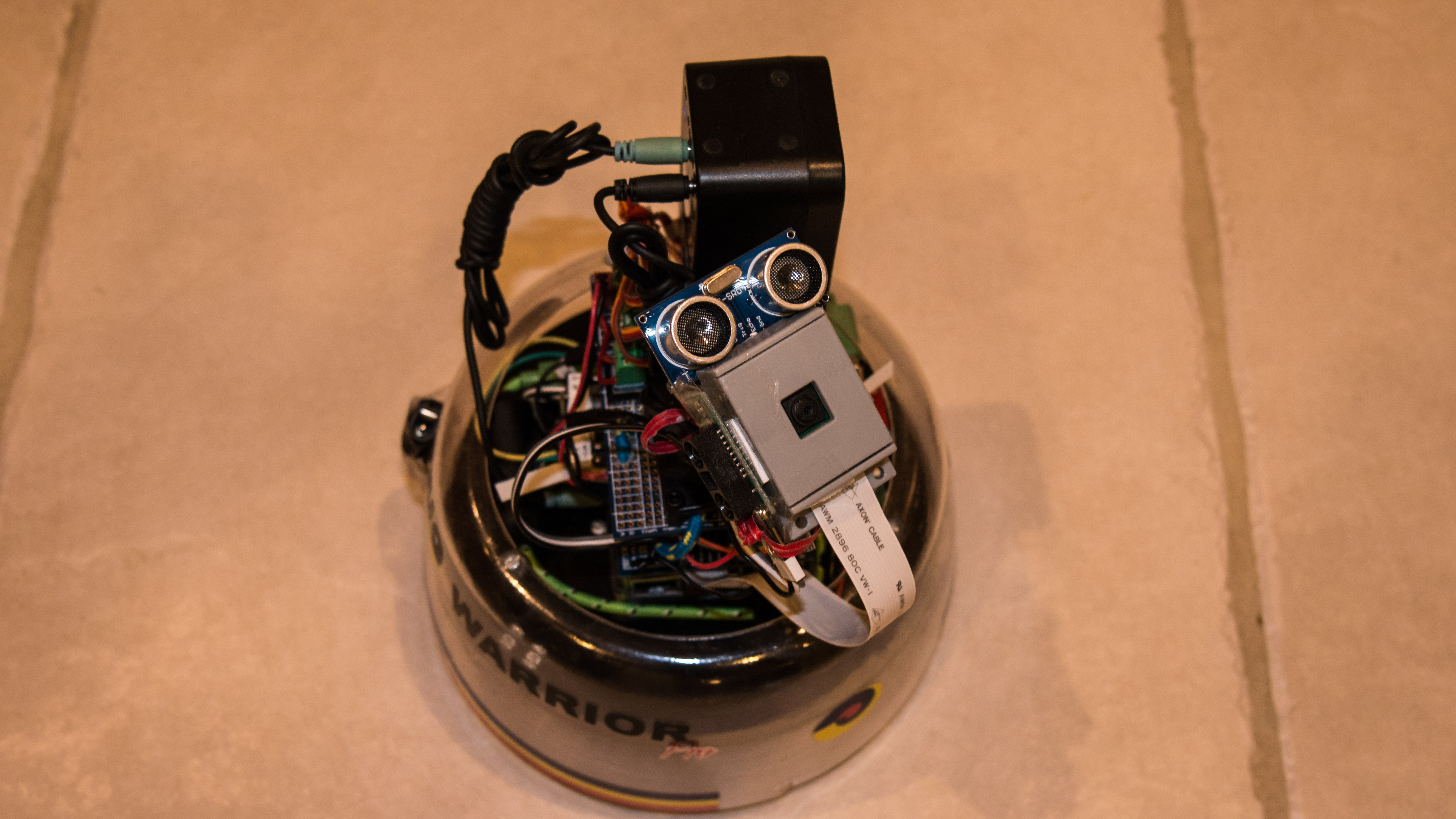

I had high hopes for the brain transplant into the RugWarriorPro, but it ended up being three years of fiddling with hardware problems. I think building over the GoPiGo3 will allow me to get started on my robot bucket list.

And the immediate need is to familiarize myself with the distance sensor and tilt/pan servos.

My first distance sensor experiment is going to be to:

- recognize a wall (three distance measurements in a line),

- approach wall to “wall following distance”

- Look left and right for a “corner”,

  - if a corner is found, move into it and orient looking out along the bisecting angle

  - if no corner, wall follow [left | right | continue], looking for a corner

- [turn left or right to parallel]

- measure safe movement distance,

- point sensor 90 deg to body to do the “follow” movement for safe distance

- point sensor parallel again and look for corner
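The first step of that list (recognizing a wall from three distance measurements in a line) can be sketched without any robot attached. This assumes the sensor has been panned to three angles and each reading is a `(distance_mm, angle_deg)` pair, with 0 degrees straight ahead. The function names and the 20 mm tolerance are illustrative guesses, and no GoPiGo3 calls are shown.

```python
import math


def polar_to_xy(distance_mm, angle_deg):
    """Convert a pan-angle reading to (x, y): x to the right, y forward."""
    a = math.radians(angle_deg)
    return distance_mm * math.sin(a), distance_mm * math.cos(a)


def is_wall(readings, tolerance_mm=20.0):
    """True if three (distance_mm, angle_deg) readings lie on one line.

    Measures how far the middle point sits from the straight line
    through the two outer points and compares that to the tolerance.
    """
    (x1, y1), (x2, y2), (x3, y3) = [polar_to_xy(d, a) for d, a in readings]
    span = math.hypot(x3 - x1, y3 - y1)
    if span == 0:
        return False  # outer points coincide, no line to test against
    offset = abs((x3 - x1) * (y1 - y2) - (x1 - x2) * (y3 - y1)) / span
    return offset <= tolerance_mm


if __name__ == "__main__":
    # A flat wall 500 mm straight ahead, seen at -30, 0 and +30 degrees.
    side = 500 / math.cos(math.radians(30))
    print(is_wall([(side, -30), (500, 0), (side, 30)]))
    # Something much closer in the middle: not a flat wall.
    print(is_wall([(side, -30), (200, 0), (side, 30)]))
```

The same three points could later give the wall's angle relative to the bot, which is what the "turn to parallel" step needs.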