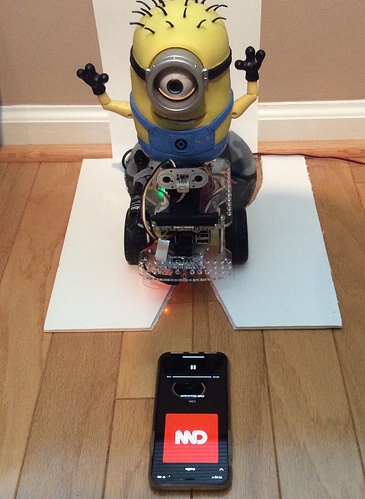

Carl is over two years old now and is still waiting for me to engage him in conversations. I set a goal that 2021 will be the year Carl learns to listen for commands. If he learns to behave, I’ll consider teaching him more general conversational dialog.

Years back I experimented with the CMU Sphinx / PocketSphinx research engine for speech recognition. PocketSphinx did everything - hotword, grammar, and language model forms of speech recognition, but it was “old” technology considered brute-force by today’s standards.

While I was experimenting with PocketSphinx, the research community was experimenting with “neural nets” for language-model speech recognition, which is needed as input to natural language understanding (NLU) dialog engines.

Today Carl has his choice of DeepSpeech, Kaldi, and Vosk for off-line speech recognition using machine learning / deep learning. (Carl has a Google Cloud API account, but I don’t let him loose with the credit card very often.) There may be other players, but I only have so much patience to search.

DeepSpeech will run on Carl’s Pi3B, but it is primarily aimed at big platforms with a GPU (graphics processing unit) to process the connection networks. Carl was not impressed with DeepSpeech’s processor load and much-slower-than-real-time recognition on his RPi.

Vosk is an open-source, connection-net powered recognition engine that hails from the Kaldi research engine. It was created and is supported by a Russian reco guru - Nickolay V. Shmyrev, who helped me five years ago with PocketSphinx and is still willing to engage on his “obsession.”

Vosk only has two forms of recognition - language-model or list-of-words. List-of-words constrains the recognition vocabulary, but does not enforce any syntax (no grammars). Either form uses approximately 40% of one core of Carl’s Pi3B even when sitting idle and draws an extra 75 mA, which costs about 1.5 hours of “playtime”, so I wouldn’t want to run it 24/7 when not conversing with Carl.
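For the curious, here is a minimal sketch of how the two Vosk forms differ in Python. As far as I can tell, the only difference is an optional third argument to `KaldiRecognizer`: a JSON list of allowed phrases. The helper names, the model directory, and the example phrases are my own placeholders, not anything Carl actually runs, and I believe the word list only works with models that support it and only for words already in the model’s vocabulary.

```python
import json


def build_word_list(phrases, allow_unknown=True):
    """Return the JSON string form of a phrase list for Vosk's list-of-words mode.

    "[unk]" gives the recognizer somewhere to put speech that matches
    none of the listed phrases.
    """
    items = [p.strip().lower() for p in phrases if p.strip()]
    if allow_unknown:
        items.append("[unk]")
    return json.dumps(items)


def make_recognizer(model_dir, sample_rate=16000, phrases=None):
    """Create a Vosk recognizer in language-model or list-of-words form.

    model_dir is a placeholder path to an unpacked Vosk model.
    """
    # Imported here so build_word_list() can be tried without Vosk installed.
    from vosk import Model, KaldiRecognizer

    model = Model(model_dir)
    if phrases is None:
        # Language-model form: full vocabulary of the model.
        return KaldiRecognizer(model, sample_rate)
    # List-of-words form: vocabulary constrained, but no syntax enforced.
    return KaldiRecognizer(model, sample_rate, build_word_list(phrases))
```

Something like `make_recognizer("model", phrases=["go forward", "stop"])` would give the constrained form; audio chunks are then fed to `AcceptWaveform()` and the text comes back as JSON from `Result()`.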

Now, in 2021, some folks in the neural-net speech recognition community are turning their attention to using connection networks for hot-word detection. PyTorch, PicoVoice Porcupine, and Nyumaya are the three options I found.

With PyTorch I would have to become a speech and deep learning guru, then train my own hot-word model on my Mac. That was interesting reading but not going to happen.

I tested the PicoVoice Porcupine engine first, and was extremely impressed with the low (10% of one core) processing load, phenomenal recognition of far-field voice and even whispers, and near zero false recognitions. However, PicoVoice will not even accept email from non-commercial domains (google, yahoo, hotmail), and will not create a custom hot-word model for Carl. I would have to say “Porcupine” or “Computer” to get Carl to begin listening for commands. Carl insists that I say his name if I want his attention.

Carl Listening To CNN for “Marvin”, “Sheila”, “FireFox” or “Alexa”:

I discovered another connection-net based hot-word engine - Nyumaya out of Austria, the brainchild of a smiling fellow by the name of Neumair Günther. This engine requires the same barely-noticeable 10% of one core load, and exhibits great recognition of the hot-words (“Marvin”, “Sheila”, “FireFox”, or “Alexa”) with either normal speaking voice or whispers. It only had 3 false recognitions during an hour of Carl listening to the CNN audio news feed, and still recognized my normal voice hot-word utterance at the same volume as the background audio news feed.

Neumair Günther responded to my inquiry about a custom model for Carl with “Of course carl will be trained.” I was so excited I awoke at 5:45am this morning to create a tool for recording samples of speaking “carl”, “carl listen”, and “hey carl” (with the recording itself to happen at a more reasonable hour for my wife and me to “play with Carl”).

So it looks like Carl will be able to use the Nyumaya hot-word engine to wake up when I address him, and the Vosk engine to recognize what I want from him. Of course, recognizing what I want and knowing how to do my bidding will have to come from my fingers, hopefully at more reasonable hours!
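The wake-then-listen plan above can be sketched as a small two-state loop. This is only an outline under my own assumptions: `detect_hotword` and `recognize_command` are hypothetical stand-ins for whatever the Nyumaya and Vosk wrappers end up looking like, and the timeout value is a guess. The point is that the expensive recognizer is only consulted after the cheap hot-word detector fires.

```python
def listen_loop(audio_frames, detect_hotword, recognize_command,
                max_command_frames=50):
    """Yield recognized commands, one per hot-word wake-up.

    detect_hotword(frame) -> bool        (hypothetical hot-word wrapper)
    recognize_command(frame) -> str|None (hypothetical command recognizer)
    """
    awake = False
    frames_awake = 0
    for frame in audio_frames:
        if not awake:
            # Asleep: only the low-load hot-word detector runs.
            if detect_hotword(frame):
                awake = True
                frames_awake = 0
            continue
        # Awake: feed audio to the command recognizer until it returns
        # text or the listening window runs out.
        frames_awake += 1
        text = recognize_command(frame)
        if text:
            yield text
            awake = False
        elif frames_awake >= max_command_frames:
            awake = False
```

Going back to sleep after each command (or after the timeout) is the design choice that keeps the 40%-of-a-core recognizer from running around the clock.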