I am a neophyte to the whole subject of localization using inertial measurements (3 accelerometers, 3 gyros, [and 3 magnetometers]). For years I have seen books, papers, and university-level courses on it, and having seen how hard the problem is, I have generally felt the whole topic to be beyond me. Additionally, since none of my prior robots could use heading, location, or “known objects” to any advantage, I have not been inclined to invest in the hardware to learn what can and cannot be accomplished by a “non-research” robot.

Obviously, one way to start would be the [u]DI IMU[/u].

The DI IMU has 9DOF measurement, with 16-bit gyros, 14-bit accelerometers, ?-bit magnetometers, and includes a 100Hz “IMU fusion processor” which I would hope could make it easy to define a [0x, 0y, 0z, 0heading], drive a little, stop, and then obtain the latest [x,y,z,heading] “pose.” (It seems to be much more capable than that, but that is the level of my thinking at this point.)

But even before that, I would need to purchase some IMU, and I don’t know how to compare the DI IMU with other apparently similar units, such as the [u]Seeedstudio Grove IMU 9DOF v2.0[/u], available at slightly less than half the price of the DI IMU.

The Seeedstudio unit is based on the MPU-9150 chip, which purports to have 16-bit accelerometers, 16-bit gyros, and 13-bit mags, claims to have a “fusion processor,” and claims higher max sample rates (filtered 256Hz). I can’t tell whether that “fusion processor” is merely handling a configurable FIFO queue of individual sensor readings or is also performing some advanced calculations or filtering.

The DI IMU would appear to have an “it’s our product” support advantage.

Are there other advantages of the DI IMU?

Other DI IMU Questions:

- I cannot figure out from the [u]sample program[/u] for the DI IMU sensor how to program a “define zero, drive some, print pose” routine, or the more interesting “define zero, drive some, display an [x,y] vs time map.” Any hints where to start?

- Don’t the motors/encoders make magnetometer readings worthless?

- To get path data, I should use the BNO055 IMU Operating mode and fetch Fusion data, correct?

- And what is “fusion data”? Are the quaternion and Euler angles the “fusion data”?

- Is the fusion simply averaging between the sensors, or something better?

- Can you point me to something to learn how to convert “fusion data” to [x, y, headingXY]?
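To make that last question concrete, here is my naive guess at the kind of calculation involved. It is only a minimal sketch under assumptions I can't confirm: that the fused heading comes back in degrees, measured counterclockwise from the starting direction (flip the sign if the sensor reports clockwise), and that the distance for each short step comes from the wheel encoders rather than from the IMU. The function name `integrate_pose` and the sample readings are made up for illustration; none of this is taken from the DI library.

```python
import math

def integrate_pose(start, steps):
    """Dead-reckon a path from (distance, heading_deg) steps.

    start: (x, y) at the moment "zero" is defined.
    steps: iterable of (distance, heading_deg) pairs; distance is assumed to
           come from wheel encoders, heading_deg from the IMU's fused output.
    Returns the list of (x, y) points visited, including the start.
    """
    x, y = start
    path = [(x, y)]
    for distance, heading_deg in steps:
        theta = math.radians(heading_deg)
        # Project each step onto the x and y axes using the current heading.
        x += distance * math.cos(theta)
        y += distance * math.sin(theta)
        path.append((x, y))
    return path

# Hypothetical readings: 1.0 m straight ahead, then 1.0 m after a 90 degree left turn.
path = integrate_pose((0.0, 0.0), [(1.0, 0.0), (1.0, 90.0)])
x, y = path[-1]
print(f"x={x:.2f} y={y:.2f}")
```

If this is roughly right, the list of points returned is what I would plot for the [x,y] map, and the last heading reading would be the headingXY.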

And perhaps the most important question: Is localization with any IMU, indeed, too complex for a home robot?

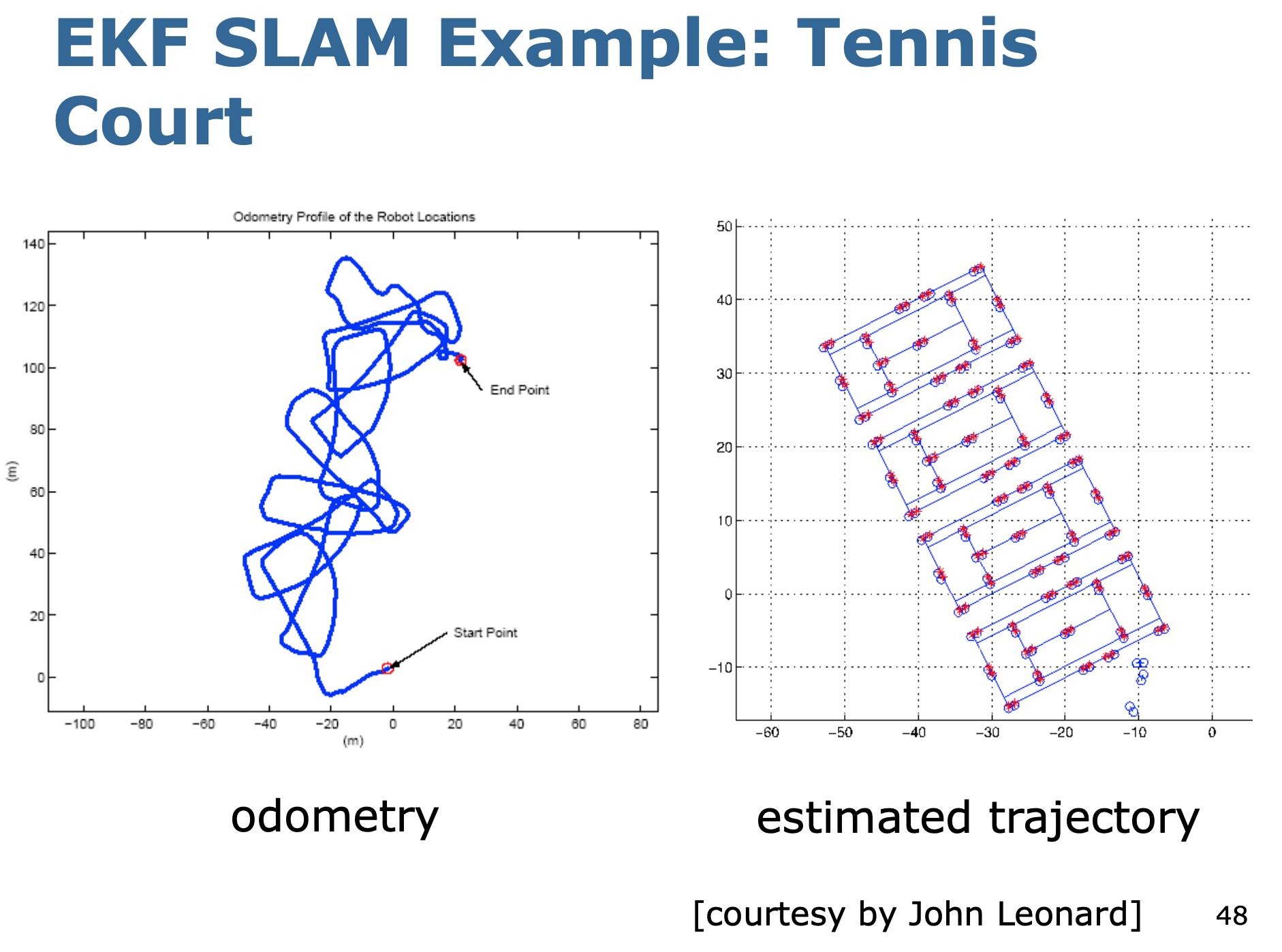

I found this example from a [u]university discussion on SLAM[/u] that makes me think this is hopeless. I can clearly see the math is beyond me, and it looks like the raw data out of the IMU is nowhere near useful without my robot having a Ph.D. in math: