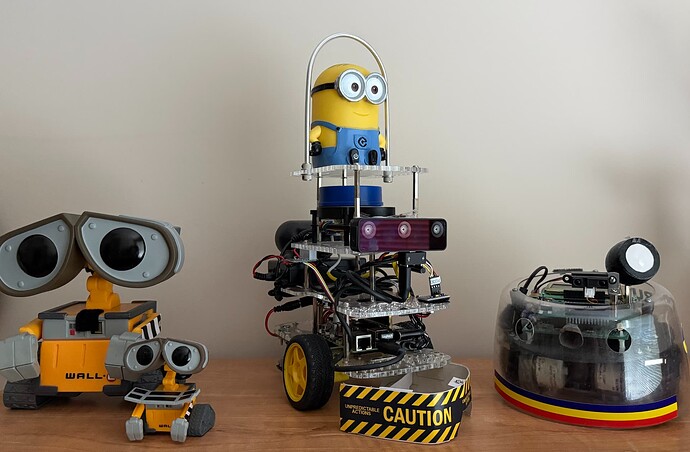

After two years of off-and-on attempts to teach my Raspberry Pi powered robot (and myself) to use the nav2 stack, finally… today my ROS2 Humble robot GoPi5Go-Dave navigated (using a whole-house map he previously created) to the next room and back to a spot in front of his dock.

I first attempted to learn slam_toolbox and nav2 totally on my own, then unsuccessfully tried adapting the TurtleBot4 code. Next, I successfully adapted the TurtleBot3 waffle model, TurtleBot3_Cartographer, and TurtleBot3_Gazebo packages for my robot, so I thought it would be a piece of cake to convert the TurtleBot3_navigation2 package for my robot.

Now, navigation needs a base_footprint link in the URDF, which my robot never needed before. No problem: just add the link and joint and we’ll be navigating in no time, right?

Well, I had chosen to put both my base_link and the base_footprint at origin 0,0,0, which for some reason seemed to make base_footprint get optimized out of existence, or something. I kept getting “No transform from odom to base_footprint”.

Solution: move base_link up to the center of the wheels, put base_footprint back on the floor with a z offset of negative wheel radius, and update every joint to account for base_link no longer being on the floor (camera, IMU, wheels, LIDAR, each platform, and the Minion Dave character).
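To make the "subtract the wheel radius from every joint" step concrete, here is a minimal sketch using only Python's standard `xml.etree.ElementTree`. It assumes base_link is the URDF root, that every joint whose parent is base_link had its origin measured from the old floor-level base_link, and that base_footprint is a child of base_link, as the fix above describes. The sample URDF, joint names, and the 0.033 m wheel radius are hypothetical, and the script only handles plain numeric URDF, not xacro expressions. A TurtleBot3-style tree, where base_footprint is the parent of base_link, would need a different edit.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample URDF: base_link sits on the floor, so child joint z values
# are measured from the floor (the wheel axle is one wheel radius up).
SAMPLE_URDF = """<robot name="demo_bot">
  <link name="base_link"/>
  <link name="laser"/>
  <link name="left_wheel"/>
  <joint name="laser_joint" type="fixed">
    <parent link="base_link"/>
    <child link="laser"/>
    <origin xyz="0 0 0.2" rpy="0 0 0"/>
  </joint>
  <joint name="left_wheel_joint" type="continuous">
    <parent link="base_link"/>
    <child link="left_wheel"/>
    <origin xyz="0 0.05 0.033" rpy="-1.5708 0 0"/>
  </joint>
</robot>"""


def move_base_link_to_wheel_center(urdf_xml: str, wheel_radius: float) -> str:
    """Raise base_link by wheel_radius and hang base_footprint wheel_radius below it."""
    root = ET.fromstring(urdf_xml)

    # Each joint whose parent is base_link has its origin expressed in base_link's
    # frame. Raising base_link by wheel_radius means every child must drop by
    # wheel_radius to stay put in the world. Only xyz changes; rpy is left alone.
    for joint in root.iter("joint"):
        parent = joint.find("parent")
        if parent is None or parent.get("link") != "base_link":
            continue
        origin = joint.find("origin")
        if origin is None:  # a missing <origin> means the identity transform
            origin = ET.SubElement(joint, "origin", xyz="0 0 0", rpy="0 0 0")
        x, y, z = (float(v) for v in origin.get("xyz", "0 0 0").split())
        origin.set("xyz", f"{x:g} {y:g} {z - wheel_radius:g}")

    # An existing base_footprint joint at 0,0,0 was shifted by the loop above and
    # now sits at -wheel_radius. If there was none, add the link and a fixed joint.
    has_footprint = any(
        j.find("child") is not None and j.find("child").get("link") == "base_footprint"
        for j in root.iter("joint")
    )
    if not has_footprint:
        ET.SubElement(root, "link", name="base_footprint")
        joint = ET.SubElement(root, "joint", name="base_footprint_joint", type="fixed")
        ET.SubElement(joint, "parent", link="base_link")
        ET.SubElement(joint, "child", link="base_footprint")
        ET.SubElement(joint, "origin", xyz=f"0 0 {-wheel_radius:g}", rpy="0 0 0")

    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(move_base_link_to_wheel_center(SAMPLE_URDF, wheel_radius=0.033))
```

Because the move is a pure translation along z, each child joint keeps its x, y, and rpy and simply drops by the wheel radius. The wheel joints landing at z = 0 is a handy sanity check before looking at the model in rviz2 again.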

After sticking to “only make one change at a time” with the URDF, killing and restarting the joint and robot state publishers, and checking the robot model in rviz2 a few million times, the URDF was ready.

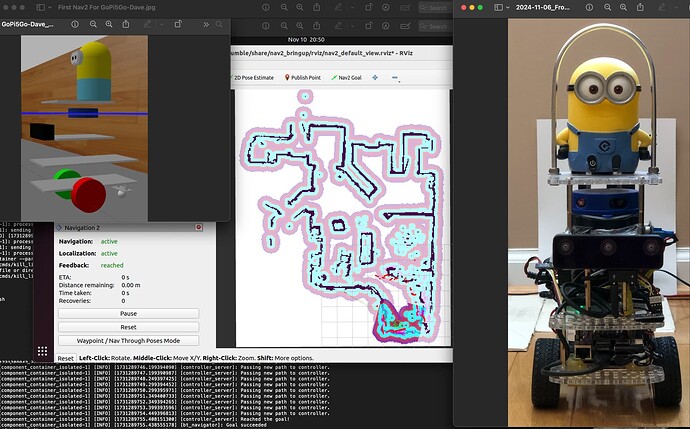

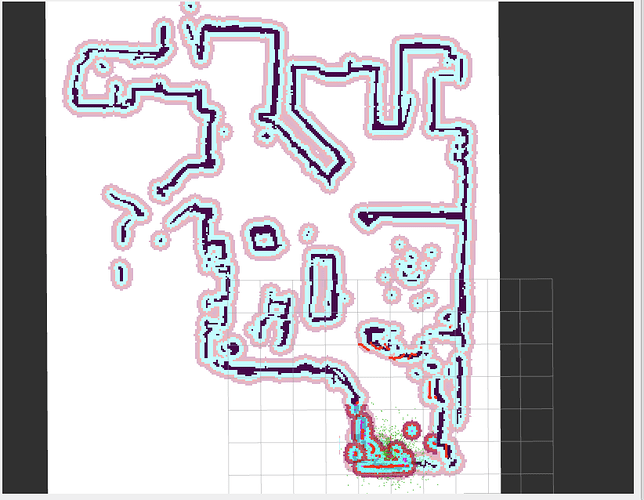

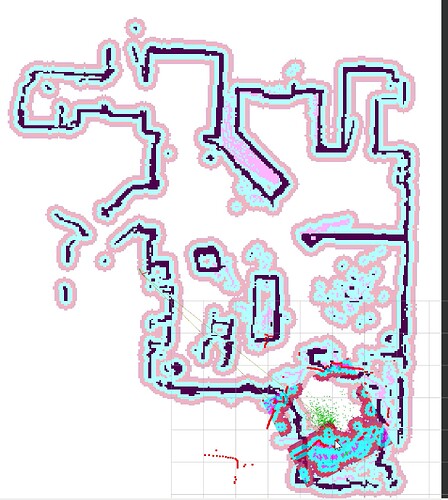

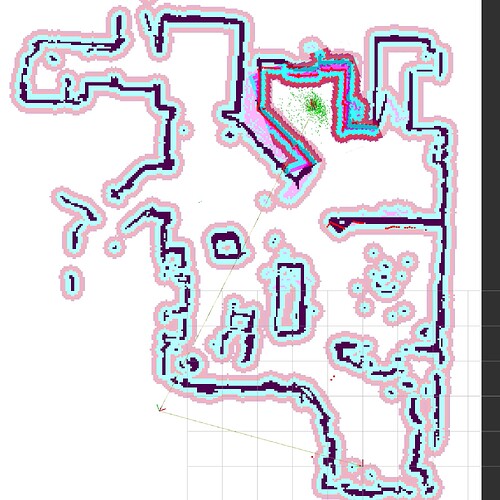

I fired up the LIDAR, fired up my “GoPiGo3 Robot Nav2” package on the bot, and launched rviz2 on my desktop. We’ve got the map, so give it an initial pose estimate. We’ve got occupancy grid dots appearing, and look at that: the costmap is displayed.

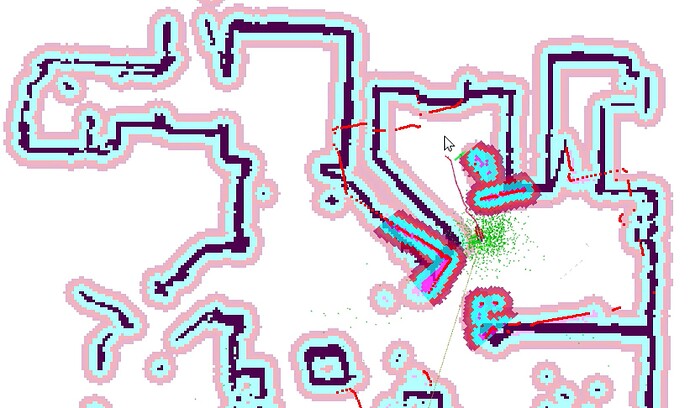

Time for the test - give it a navigation goal a meter forward and pray! Yo! It navigated.

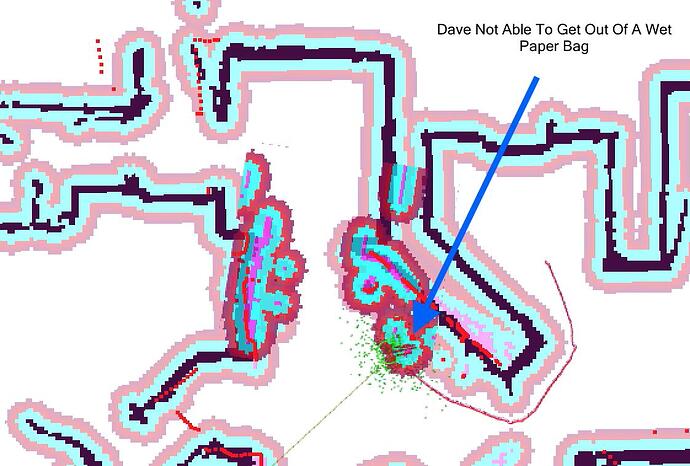

I got brave and gave it a goal in the next room, one that would require both global and local path planning plus avoidance of unmapped obstacles. I nearly peed my pants with excitement. (At age 72 that is more of a concern than it used to be.) GoPi5Go-Dave arced out the non-orthogonal doorway of his home office, went around a corner, avoided the sofa, the dining room chair, and the table, and arrived at the non-orthogonal boundary of the next room, where the goal was. Almost at the goal, he paused with a bout of indecisiveness at a very complex wall section. I canceled the goal and gave him a moment to rest while I returned to my desk.

As he announced “Battery at 10.3v Need To Prepare For Docking”, I issued a new goal for him to return to his docking-ready pose. He successfully navigated back “home”, and with a slight nudge from my foot he was in the proper position to dock as he announced “Battery at 10.1v Docking Now”.