Continuing the discussion from Finally, GoPi5Go-Dave Navigated Away From Home:

==================================

I don’t have “stereo depth perception”, yet I can navigate.

================================

There’s the argument that “We got to the moon using computers less powerful than an HP programmable calculator”, with the implication that this kind of stuff is easy.

The reality of the space program is that we didn’t get to the moon using computers “less powerful than an HP programmable calculator”. We got to the moon using a custom computing cluster infinitely more powerful than even the supercomputers of today - a network of human minds providing real-time analysis and control - both in space and on the ground. The “computers” of the day were - essentially - the equivalent of programmable calculators that did the data pre-processing for the computing cluster of human minds.

AI is interesting. However, the most powerful and real-time adaptable computer is still the human mind.

=============================

However (seemingly in contradiction of the above), I’m wondering if we’re not seeing the forest for the trees.

I had a friend who wrote a software plotting package[1] that could create, draw, and rotate a 3-dimensional wire-frame model with a point of view that could move and rotate anywhere within the field of view of that object. More importantly, he did this with an Atari 8-bit computer that had limited-precision trig, 64K of RAM, and was slow as molasses in February. (Roughly a 1.8 MHz clock.)

He did this by reducing the complex trig to simplified integer arithmetic. I don’t remember exactly how, and I didn’t understand it at the time, but I do remember it was absolute genius.

I’m not claiming to be a genius, but this makes me wonder:

Are we missing something? Are we trying to do too much with the limited resources we have? Is it possible to simplify the model in such a way that, though it might not be able to do incredible mapping and such-like, it appears to be able to do the incredible mapping?

Super 3D Plotter took advantage of the fact that the Atari display, even in its highest resolution modes, was coarser than the precision of the mathematics. As a result, my friend could simplify the mathematics to match the limited resolution of the display and still produce the effect of higher-precision mathematics.
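To make that idea concrete, here is a minimal Python sketch of one generic way to do it: a precomputed integer sine table plus fixed-point multiplication. I don't know how Super 3D Plotter actually did it, so this is not a reconstruction of his method. The names (`SIN_TABLE`, `rotate_z_int`, `max_pixel_error`) and the choices of 256 angle steps and 8 fractional bits are my own assumptions for illustration.

```python
import math

SCALE = 256   # assumed fixed-point scale: 8 fractional bits
STEPS = 256   # assumed angle units per full turn (an angle fits in one byte)

# Built once up front; an 8-bit program would ship this table as data
# instead of calling a slow floating-point sin() at run time.
SIN_TABLE = [round(math.sin(2 * math.pi * i / STEPS) * SCALE) for i in range(STEPS)]


def isin(a):
    """Integer sine, scaled by SCALE; angle 'a' is in 1/STEPS of a turn."""
    return SIN_TABLE[a % STEPS]


def icos(a):
    """Integer cosine: the sine table read a quarter turn ahead."""
    return SIN_TABLE[(a + STEPS // 4) % STEPS]


def rotate_z_int(x, y, a):
    """Rotate an integer screen point about the origin using integers only."""
    s, c = isin(a), icos(a)
    half = SCALE // 2  # added before dividing so the result rounds to nearest
    return ((x * c - y * s + half) // SCALE,
            (x * s + y * c + half) // SCALE)


def max_pixel_error(x, y):
    """Worst-case distance (per axis, in pixels) from the exact float rotation."""
    worst = 0.0
    for a in range(STEPS):
        theta = 2 * math.pi * a / STEPS
        fx = x * math.cos(theta) - y * math.sin(theta)
        fy = x * math.sin(theta) + y * math.cos(theta)
        ix, iy = rotate_z_int(x, y, a)
        worst = max(worst, abs(ix - fx), abs(iy - fy))
    return worst


if __name__ == "__main__":
    print(rotate_z_int(100, 0, 64))   # a quarter turn
    print(max_pixel_error(100, 50))   # stays under one pixel for this point
```

The point of the sketch is the last function: for a point like (100, 50), the integer-only result never lands a full pixel away from the "proper" floating-point answer, so on a display that can only show whole pixels the extra precision would buy very little.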

Can we do the same thing with the GoPiGo-3? Can we use the processing capabilities of the human mind to “simplify” the process? Instead of trying to duplicate the processes used by the human mind, can we simplify the process so that we get the same effect - even if not so precise or technically clever and accurate?

What say ye?

=======================

[1]

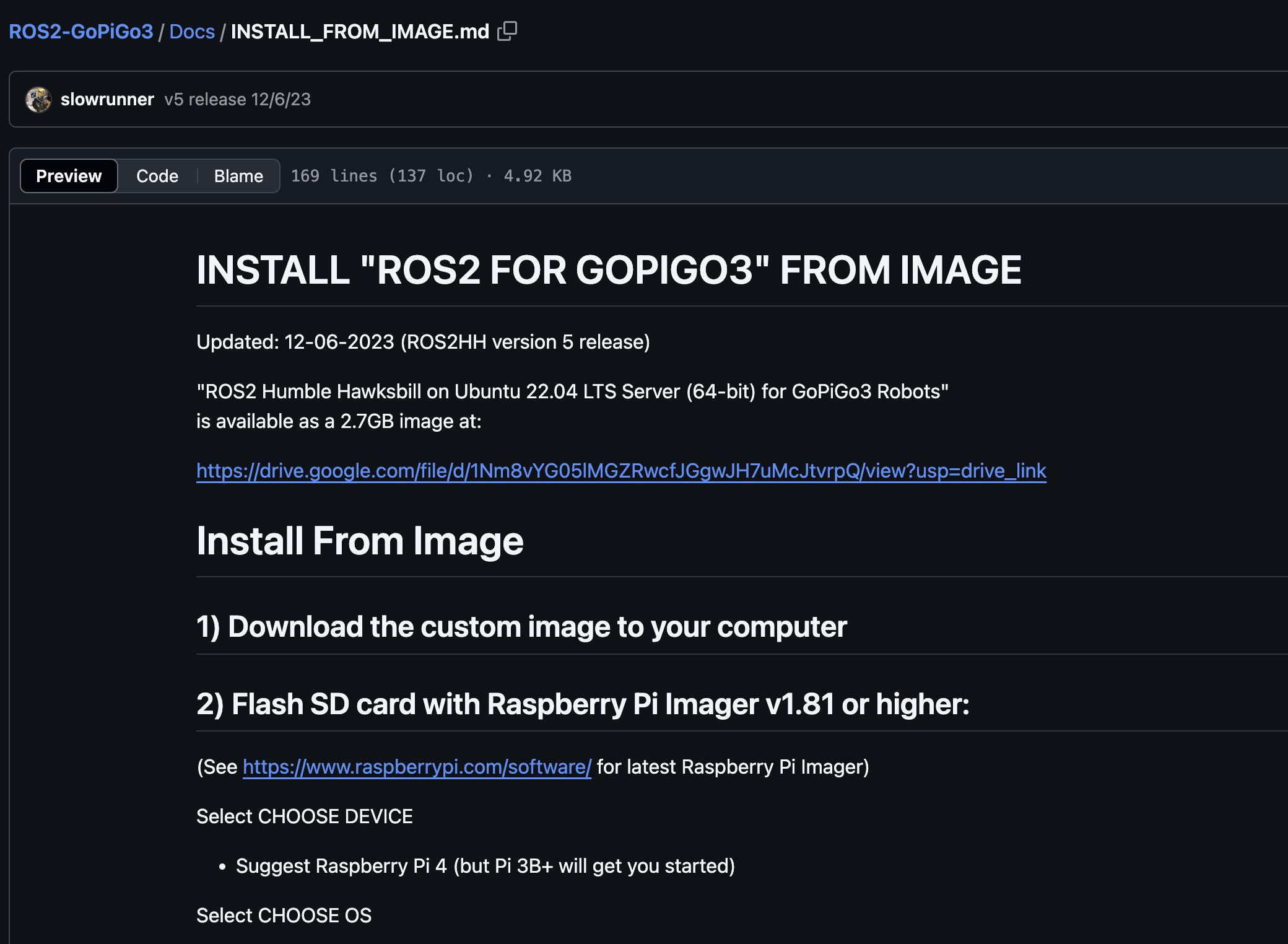

It was called “Super 3D Plotter”. It has since been released to the 8-bit Atari “Pool Disk” CD (an archive of Atari 8-bit software from that era), and you might be able to find it online if you look for it[2].

[2]

Atari 400 800 XL XE Super 3D Plotter II : scans, dump, download, screenshots, ads, videos, catalog, instructions, roms

Pool Disk Too (Disc 2) : Bit Byter User Club : Free Download, Borrow, and Streaming : Internet Archive