“Integrating with the remote PC”

If you copy over the ydlidar files from the book (as suggested on P290), then the git clone step on P291 won’t work: you’ll get an error because there’s already a folder named ydlidar. To get the latest files, I removed the book copy and ran the git clone step instead. That may not have been the best move, as we’ll see…

For some reason, in “Running the YDLIDAR ROS Package” I did not see “/tf” when I ran rqt_graph. But I did confirm (with “rostopic list”) that the /tf topic was being published, and I could “rostopic echo” it just fine.

If you use the “git clone” approach, you won’t immediately be able to do the next step, because there is no display_scan.launch file. Three files from the book’s version seem to be missing from the git version of the ydlidar folder:

- src/scan.py

- launch/display_scan.launch

- launch/gopigo3_ydlidar.launch

You’ll want to copy them over from the book’s files into the cloned ydlidar folder. You won’t actually need to run catkin_make again, but it doesn’t hurt to be sure.
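If you'd rather script the copy than drag files around, here is a minimal Python sketch. It assumes you still have the book's ydlidar folder somewhere on disk. The function name `copy_missing` and both folder arguments are my own placeholders, not anything from the book or the ydlidar repo. It only copies a file if the cloned folder doesn't already have it, so it won't overwrite anything from git.

```python
import shutil
from pathlib import Path

# The three files listed above, relative to the root of the ydlidar package.
BOOK_ONLY_FILES = [
    "src/scan.py",
    "launch/display_scan.launch",
    "launch/gopigo3_ydlidar.launch",
]


def copy_missing(book_pkg, cloned_pkg, files=BOOK_ONLY_FILES):
    """Copy book-only files into the cloned package; return what was copied.

    book_pkg and cloned_pkg are hypothetical paths to the two ydlidar folders.
    """
    book_pkg, cloned_pkg = Path(book_pkg), Path(cloned_pkg)
    copied = []
    for rel in files:
        src, dst = book_pkg / rel, cloned_pkg / rel
        # Skip files the clone already has, and files missing from the book copy.
        if dst.exists() or not src.exists():
            continue
        dst.parent.mkdir(parents=True, exist_ok=True)
        # copy2 keeps permission bits, so scan.py stays executable if it was.
        shutil.copy2(src, dst)
        copied.append(rel)
    return copied
```

You would call it as something like `copy_missing("/path/to/book/ydlidar", "/path/to/catkin_ws/src/ydlidar")`, substituting wherever the two folders actually live on your machine.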

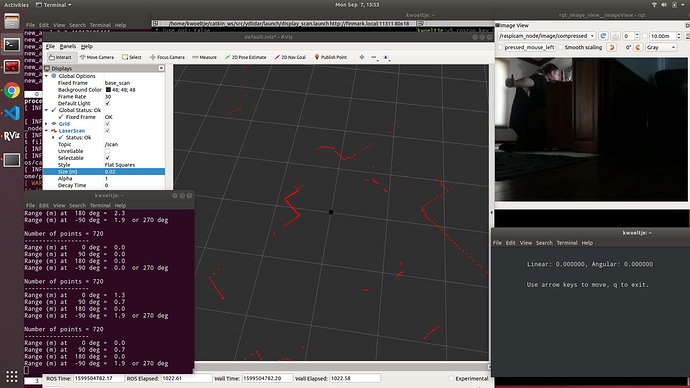

When I launched display_scan.launch I did not see any scan, and got an error stating “Fixed Frame [map] does not exist”. It turned out this was easy to fix (but took a few minutes of panicked googling):

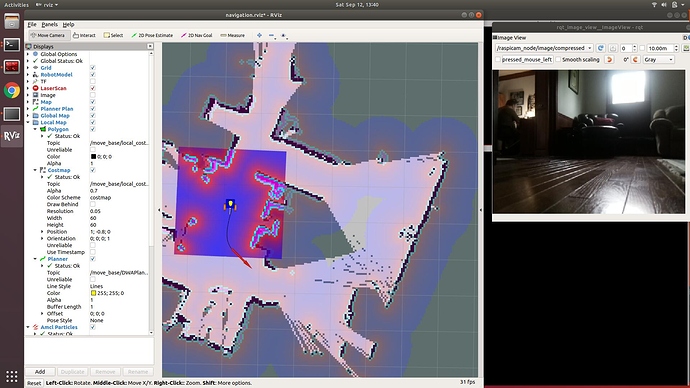

- Under “Global Options” click on “map” next to “Fixed Frame”, then choose “laser_frame” from the dropdown

- Towards the bottom of the window, click the “Add” button

- About 2/3 down on the list that pops up (in the “By display type” tab) you should see “LaserScan”; pick that and click the “OK” button

- Click on the small triangle to show the options under “LaserScan”

- Click the empty column to the right of “Topic” and then select “/scan” from the dropdown.

You should now see the lidar point cloud. If you increase the “Decay Time” for the display (e.g. to 2 seconds), points from earlier scans stay on screen longer, so you’ll have better definition, but the display reacts more slowly to movement.

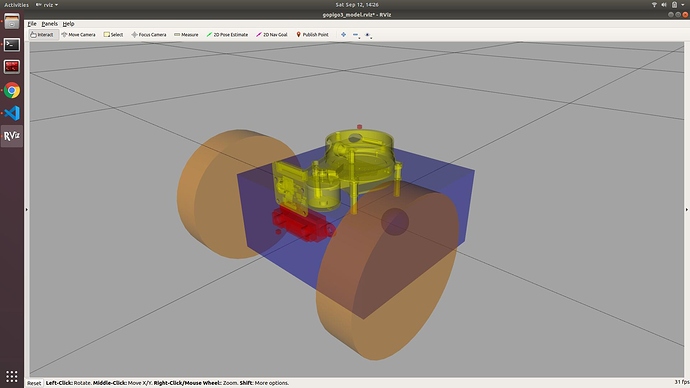
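To get a feel for what those points are, here is a small Python sketch of the geometry: each reading on /scan is a distance at a known beam angle, and turning it into an (x, y) point in laser_frame is plain trigonometry. The field names (`ranges`, `angle_min`, `angle_increment`, `range_min`, `range_max`) come from the standard sensor_msgs/LaserScan message; the function names are my own, and this is only an illustration of the math, not how RViz does it internally.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert LaserScan polar readings to (x, y) points in the laser frame."""
    points = []
    for i, r in enumerate(ranges):
        # Readings outside [range_min, range_max] are invalid; this
        # comparison also rejects inf and NaN.
        if not (range_min <= r <= range_max):
            continue
        theta = angle_min + i * angle_increment  # beam angle in radians
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points


def on_scan(msg):
    """Callback sketch for a sensor_msgs/LaserScan message."""
    points = scan_to_points(msg.ranges, msg.angle_min, msg.angle_increment,
                            msg.range_min, msg.range_max)
    print("%d valid points out of %d readings" % (len(points), len(msg.ranges)))
```

To try it against the real lidar you would, assuming a working ROS setup, initialise a node with `rospy.init_node`, register the callback with `rospy.Subscriber("/scan", LaserScan, on_scan)` and then call `rospy.spin()`. I've left that part out of the block so the math can be run without ROS installed.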

Oh - also note you’ll have to add the robot model manually as well if you want that.

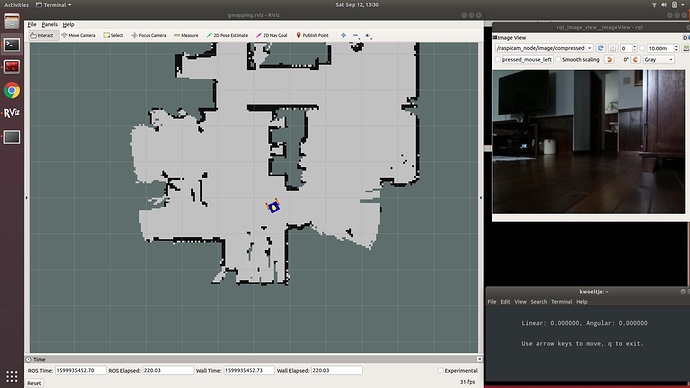

OK - next steps - “Integrating with Raspberry Pi”

/K