Is there a way to install ROS on the GoPiGo?

And if there is, would I be using Raspbian for Robots or DexterOS?

Hi m-alarifi. You would be using Raspbian for Robots. Check out this link in the ROS wiki: http://wiki.ros.org/ROSberryPi/Setting%20up%20ROS%20on%20RaspberryPi

I assume you know which version you want installed. In case you're wondering, Raspbian for Robots is the same thing as Raspbian. All Dexter Industries did was change a few things, like pre-installing the GoPiGo software on it. Just in case you don’t know how to install it, here’s the tutorial:

https://www.dexterindustries.com/howto/install-raspbian-for-robots-image-on-an-sd-card/

This article makes it look easy enough:

I presently have a GoPiGo3 and an RPLidar A1M8 and am considering installing ROS on the R4R OS to be able to use the RPLidar to map a room with the GoPiGo3.

I realize that there are already instructions on the web for setting up a Lidar on the GoPiGo3 chassis, but I would rather not run Linux on my laptop.

I have looked at the “Starting with ROS on the GoPiGo3 and the Raspberry Pi” article. There seem to be some inconsistencies between the instructions in the article and the present version of R4R. For instance, the ROS instructions say:

sudo curl -kL dexterindustries.com/update_gopigo3 | bash

This should create a directory ~/Dexter/GoPiGo3

However there is already a ~/Dexter/GoPiGo3 directory in the present version of R4R so why create a directory that already exists?

This concerns me very much as to the accuracy of these installation instructions.

Comments?

Regards,

Tom C

Hi Tom,

The R4R image is not updated as often as the /Dexter/ folders, so that command will bring your Dexter software up to date.

Also make sure your GoPiGo3 firmware is version 1.0.0 (not changed in a while, so probably is already). Either use the R4R desktop GUI app “GoPiGo3 Control Panel” or “Test and Troubleshoot” app “Troubleshoot GoPiGo3” button (and look at the log.txt on the desktop).

Special request: would you start a “Tom’s GoPiGo3 ROS Adventure” thread with a picture and description of your bot at this point (and anything about yourself you want to share)?

I try to stay “ROS aware”, but devote most of my energy to learning the capabilities and limitations of the GoPiGo3, RPi3, TOF-Distance Sensor, Wheel Encoders, PiCamera, 8cell NiMH Battery, and SmartCharger, with a focus on the technology and algorithms needed for my bot to find and reliably use its recharge dock.

I’ve got a few extra sd cards, and have contemplated building a ROS on GoPiGo3 card so I could understand what ROS could do for me (without adding a LIDAR sensor), but I keep thinking it will be interesting but actually distract from finishing this OpenCV “reliably return to the dock” task.

Thanks for the response and words of advice, much appreciated.

Do you remember the Heathkit Hero 2000? It was programmed in BASIC and could actually return to its Base to recharge.

I am planning on using the GoPiGo3 as a test bed to set up and learn about SLAM for larger robots.

I will definitely document my SLAM experiments with the GoPiGo3 as a separate thread. Like you I have a number of large capacity SD Cards so I can experiment with different software configurations.

I am also experimenting with Donkey Car and have an RPi 3B+ set up with the latest Donkey Car software and interfaced to a Parallax Eddie/Arlo robot chassis. Donkey Car uses a Pi Camera and OpenCV to navigate a course visually, but I feel that the RPLidar is the better choice for SLAM.

Regards,

Tom C

Surely do remember it. Here is my “baby brother” Hero posing for a photo around 1987:

This is the latest blog post and video for my GoPiGo3 bot, Carl.

Very excited to see you on the GoPiGo3 forum.

I have a Hero retrofit kit to add the hardware for an autonomous return to its charger function if you know anyone who needs one.

I hope that I can be a real help on this forum by helping to integrate ROS and the RPLidar into the GoPiGo OS in order to experiment with SLAM.

Regards,

Tom C

LIDAR seems to be de rigueur in nearly every ROS bot paper I have read.

Does your bot also have an IMU? How much value do you think an IMU can be for localization?

I am hoping that eventually my bot will be smart enough to use the picamera + encoders + “learned visual objects” (lines in the floor, free wall baseboard lengths, wall outlets, furniture, doorways, windows, indoor light fields, …) for localization. It seems some folks are going there: (“Toward Consistent Visual-Inertial Navigation”)

No, not yet. I have, though, built a number of autonomous RC cars that used the 3DR APM (ArduPilot Mega) and Pixhawk 1 autopilots and navigated a road course autonomously using dual GPS units and built-in IMUs. I won the SparkFun AVC Peloton Class back in 2013 with an RC car equipped with an APM.

I suggest that you take a look at the Donkey Car python code if you want to navigate by “learned visual objects”. As I stated in a past post, I have a Rpi 3B+ running the Donkey Car python code and the idea is to train the Car (robot) by driving it visually over a course and then have it drive the course autonomously. The Donkey Car code uses OpenCV and TensorFlow to collect and process the visual images and then Keras to train the car’s software to drive the course autonomously.

For indoor navigation, I still feel that the RPLidar is all that is really needed to navigate a room by creating a room map and then using the map to go from a starting point to a goal end point (SLAM). Using the Pi Camera will give the user a visual view of the robot in operation as it navigates.

Regards,

Tom C

Hi All,

I am installing ROS on my GoPiGo3, which uses a 32GB SD card, following this link to the ROS website for the installation of ROS Kinetic on Raspbian Stretch.

The first thing you should do is increase the Raspbian Stretch swap file by using nano to edit the /etc/dphys-swapfile swap file value (CONF_SWAPSIZE) from 100 to 1024 and then back to 100 after you have successfully installed ROS Kinetic.
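The swap change above can also be scripted instead of edited by hand in nano. This is only a minimal sketch: `set_swapsize` is a hypothetical helper name (not part of Raspbian), and it assumes the file already contains an active `CONF_SWAPSIZE=` line, as the stock /etc/dphys-swapfile does.

```shell
#!/usr/bin/env bash
# Sketch: rewrite the CONF_SWAPSIZE line in a dphys-swapfile style config.
# set_swapsize is a hypothetical helper, not a Raspbian command.
# Usage: set_swapsize <size_in_MB> [config_file]
set_swapsize() {
    local size="$1"
    local conf="${2:-/etc/dphys-swapfile}"
    local tmp

    # Accept only plain digits so nothing odd is written into the config.
    case "$size" in
        ''|*[!0-9]*) echo "size must be a whole number of MB" >&2; return 1 ;;
    esac

    tmp="$(mktemp)" || return 1
    # Replace only the active CONF_SWAPSIZE line; every other line is kept.
    sed "s/^CONF_SWAPSIZE=.*/CONF_SWAPSIZE=${size}/" "$conf" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Write back through cat so the original file's owner and mode are kept.
    cat "$tmp" > "$conf"
    local status=$?
    rm -f "$tmp"
    return "$status"
}

# Example on the Pi (run as root):
#   set_swapsize 1024    # before building ROS Kinetic
#   set_swapsize 100     # after the install has succeeded
```

The new size normally takes effect only after the swap file is recreated, for example with `sudo dphys-swapfile swapoff`, `sudo dphys-swapfile setup` and `sudo dphys-swapfile swapon`, or after a reboot.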

During the execution of the commands in the “2.1 Setup ROS Repositories” section, if you get a “not authenticated” message, follow the command line instructions on adding a modifier to allow the download of non-authenticated files.

During the execution of the commands in the “3.3 Building the catkin Workspace” section, I had to add the -j2 option, as the default -j4 option hung while building package #45, roscpp.

If you do use the -j2 option, make sure that you continue to use it any time you rebuild the workspace, as the rebuild will probably hang again at the roscpp package build.
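One way to avoid forgetting the job limit on a rebuild is to keep the build command in a small helper. The sketch below only prints the command; the command line itself is the one quoted later in this thread, and `catkin_build_cmd` is a hypothetical name used for illustration.

```shell
#!/usr/bin/env bash
# Sketch: print the catkin build command from this thread with a fixed -j value.
# catkin_build_cmd is a hypothetical helper; it does not run the build itself.
# Usage: catkin_build_cmd [jobs]   (defaults to 2, the value that worked here)
catkin_build_cmd() {
    local jobs="${1:-2}"

    # Reject empty, non-numeric, or zero job counts.
    case "$jobs" in
        ''|*[!0-9]*|0) echo "jobs must be a positive integer" >&2; return 1 ;;
    esac

    printf '%s\n' "sudo ./src/catkin/bin/catkin_make_isolated --install -DCMAKE_BUILD_TYPE=Release --install-space /opt/ros/kinetic -j${jobs}"
}

# Example: show the command, then copy it into the terminal from the
# catkin workspace directory.
#   catkin_build_cmd 2
```

Printing rather than executing keeps the sketch harmless to try; it is meant as a reminder of the exact flags, not as a replacement for the wiki instructions.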

Otherwise the installation instructions worked as indicated.

And don’t forget that you have to install the GoPiGo3 Node.

Regards,

Tom C

Wow Thomas, Thanks.

The things folks do in ROS never cease to amaze. I just watched unsupervised learning applied to driving a ROS on Raspberry Pi car (based on “eye in the sky” localization).

I have the feeling it might be possible for my GoPiGo3 to self-learn to dock using the PiCam image, distance sensor, and odometer inputs, with successful charging status as the goal pose, based on the fact that this fellow’s car learned that driving backward can achieve the goal. (My bot has to position itself accurately facing the dock, then turn 180 degrees and back onto the dock.) BUT this sounds like a serious investigation.

So many avenues (too many) open up with a successful ROS GoPiGo3 install. Good luck, I’m rooting for you.

Thanks for the kudos, much appreciated.

However, I am having an issue with the “Starting with ROS on the GoPiGo3 and the Raspberry Pi” instructions. There seems to be a problem with the CMake command, and I am not a savvy enough programmer to work out how to correct it, so I have started a new post to ask for help.

Your link to the “Self Driving and Drifting RC Car using Reinforcement Learning…” looks quite similar to Donkey Car.

Regards,

Tom C

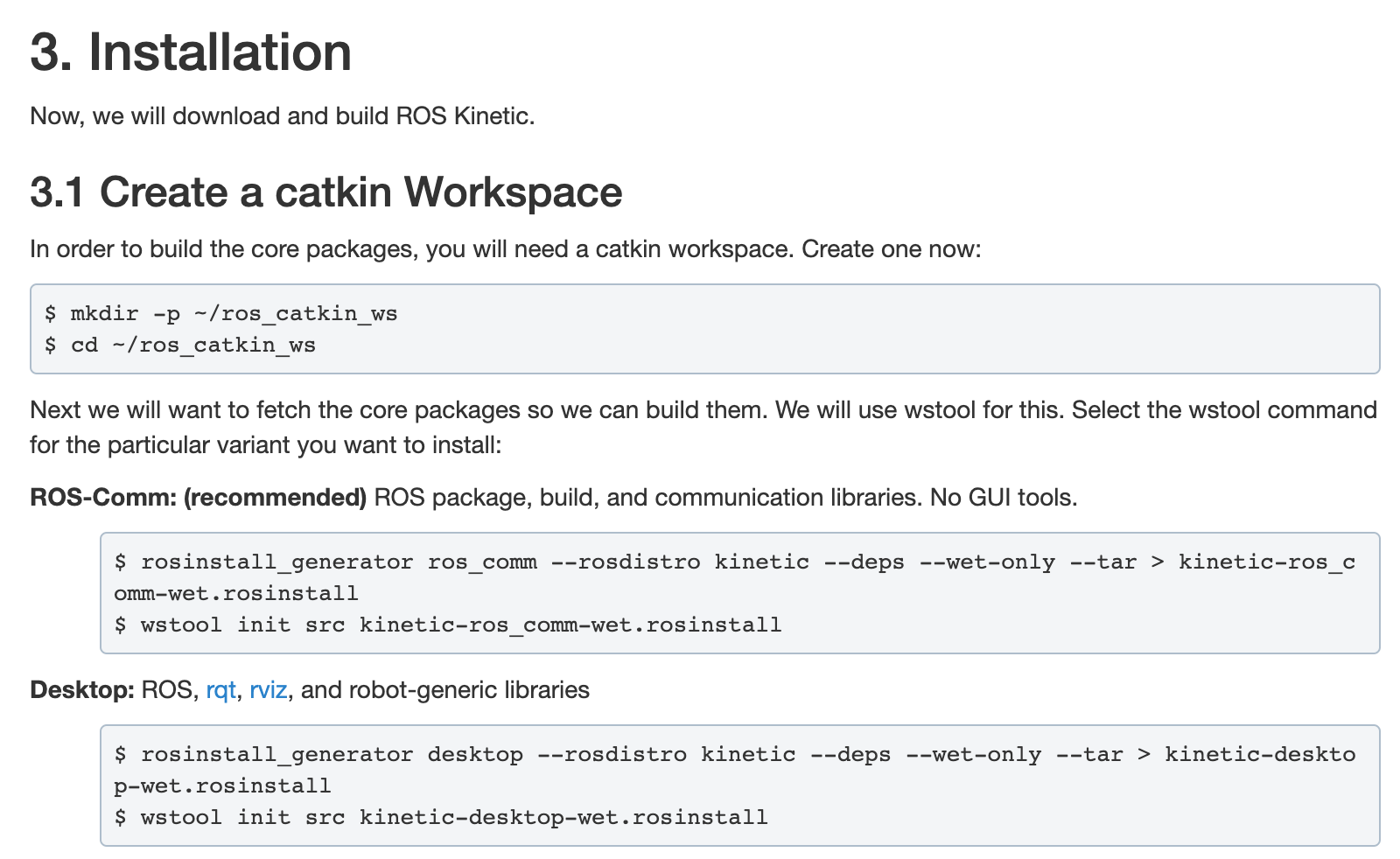

What did you choose to install in section 3.1?

Section 3.1? I used the ROS Wiki instructions to install ROS Kinetic. ROS Melodic is the latest, but the installation of the GoPiGo3Node assumes ROS Kinetic is installed.

Regards,

Tom C

Yes, in the ROS Kinetic on RPi Wiki instructions, section 3.1:

Did you do just ROS-Comm or the whole Desktop, (or some pieces-parts “variant”)?

Did you by any chance run up against:

sudo ./src/catkin/bin/catkin_make_isolated --install -DCMAKE_BUILD_TYPE=Release --install-space /opt/ros/kinetic -j2

Traceback (most recent call last):
  File "./src/catkin/bin/catkin_make_isolated", line 12, in <module>
    from catkin.builder import build_workspace_isolated
  File "./src/catkin/bin/../python/catkin/builder.py", line 66, in <module>
    from catkin_pkg.terminal_color import ansi, disable_ANSI_colors, fmt, sanitize
ImportError: No module named terminal_color
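Before starting a long build, it may be worth checking whether the installed catkin_pkg already contains terminal_color.py, since its absence is what this import error points to. The following is a minimal sketch: `has_terminal_color` is a hypothetical helper name, and the default directory is the Python 2.7 dist-packages path mentioned in this thread, which may differ on other images.

```shell
#!/usr/bin/env bash
# Sketch: check whether terminal_color.py is present in a catkin_pkg directory.
# has_terminal_color is a hypothetical helper name.
# Usage: has_terminal_color [catkin_pkg_dir]
# Returns 0 if the file exists, non-zero otherwise.
has_terminal_color() {
    local pkg_dir="${1:-/usr/lib/python2.7/dist-packages/catkin_pkg}"
    [ -f "${pkg_dir}/terminal_color.py" ]
}

# Example:
#   if has_terminal_color; then
#       echo "terminal_color.py found"
#   else
#       echo "terminal_color.py missing: expect the ImportError above"
#   fi
```

This only tests for the file at one location; a catkin_pkg installed elsewhere (for example through pip) would need its own directory passed as the argument.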

To get around this I had to copy the raw content of https://raw.githubusercontent.com/ros-infrastructure/catkin_pkg/master/src/catkin_pkg/terminal_color.py into the catkin_pkg directory:

sudo nano /usr/lib/python2.7/dist-packages/catkin_pkg/terminal_color.py

No, when installing with the -j2 option I did not encounter that problem. The problem that I had was at package #45, roscpp, when using the default option of -j4.

Also, do not use “Starting with ROS on the GoPiGo3 and the Raspberry Pi” to install the GoPiGo3_Node as it is missing an initial, critical command string and has some other problems.

Use this link to install the ROS GoPiGo3_Node instead.

Regards,

Tom C

This is the gory detail of how I successfully installed ROSberryPi Kinetic and the ROS GoPiGo3_Node on Raspbian Stretch. It would have gone even better on Raspbian for Robots (Remote Desktop works better there).