Use the scp (secure copy) command, but FileZilla is easier.

dH (delta horizontal) = x1 - x2 (pixels)

dV (delta vertical) = y1 - y2 (pixels)
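A minimal sketch of those two definitions in Python. The function name `pixel_deltas` is hypothetical. Keep in mind that in OpenCV image coordinates y grows downward, so a positive dV means point 1 sits lower in the frame than point 2.

```python
def pixel_deltas(p1, p2):
    """Return (dH, dV) in pixels for points p1 = (x1, y1) and p2 = (x2, y2).

    Hypothetical helper: dH = x1 - x2 and dV = y1 - y2, as defined above.
    """
    x1, y1 = p1
    x2, y2 = p2
    d_h = x1 - x2  # delta horizontal
    d_v = y1 - y2  # delta vertical (image y axis points down)
    return d_h, d_v


if __name__ == "__main__":
    print(pixel_deltas((400, 300), (320, 240)))  # (80, 60)
```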

Oh my god… It worked… Thank you so much!

Nice, FileZilla also works. Thank you both!


Since I cannot see what you are doing, I’m not sure what resolution you’re talking about.

If everything else is OK and it’s the CAMERA or VIDEO that’s taking too much room, you can change that by editing the /etc/uv4l/uv4l-raspicam.conf file.

Somewhere between lines 50 and 60 (depending on the version of raspicam and the config file you are using), you will see something that looks like the following:

(this is from the current version of raspicam’s uv4l-raspicam.conf file)

```
encoding = mjpeg
# width = 640
# height = 480
framerate = 30
#custom-sensor-config = 2
```

With the width and height lines commented out, the video image is HUGE - filling the entire screen/browser width.

Un-commenting these lines makes the image smaller, but still too big for what I want.

The original version of the config file as shipped with GoPiGo O/S 3.0.1 has this:

```
encoding = mjpeg
width = 320
height = 240
framerate = 15
#custom-sensor-config = 2
```

That produces an image that’s a bit too small for my taste, and a bit blurry, so I increased the size by half (to 480x360) and upped my framerate to 30.

Viz.: (my current settings)

```
encoding = mjpeg
width = 480
height = 360
framerate = 30
#custom-sensor-config = 2
```

. . . and that produces an image that is large enough, but not TOO big.
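If you want to double-check which options are actually active after editing, here is a minimal Python sketch that pulls the uncommented `key = value` lines out of a uv4l-raspicam.conf-style file. It assumes the simple one-setting-per-line format shown in the excerpts above; `read_active_settings` is a hypothetical helper (not part of uv4l), and it does not handle inline comments after a value.

```python
def read_active_settings(text):
    """Return a dict of uncommented 'key = value' settings (values as strings)."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blanks and commented-out options like "# width = 640"
        if "=" not in line:
            continue  # assumption: anything without "=" is not a setting
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip()
    return settings


SAMPLE = """
encoding = mjpeg
width = 480
height = 360
framerate = 30
#custom-sensor-config = 2
"""

if __name__ == "__main__":
    print(read_active_settings(SAMPLE))
```

On the robot you would pass it the real file, e.g. `read_active_settings(open("/etc/uv4l/uv4l-raspicam.conf").read())`.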

Maybe this will help?

Follow-up note:

In order to maximize the field of view, make sure the settings maintain a 4:3 aspect ratio.
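A quick way to check candidate resolutions against 4:3 before putting them in the config file. This is only a sketch with hypothetical helper names; 320x240, 480x360 and 640x480 from this thread all reduce to exactly 4:3, while 1280x720 (16:9) is included only for contrast.

```python
from fractions import Fraction

FOUR_BY_THREE = Fraction(4, 3)


def is_four_by_three(width, height):
    """True if width:height reduces exactly to 4:3."""
    return Fraction(width, height) == FOUR_BY_THREE


def height_for_width(width):
    """Nearest whole-pixel height that keeps a 4:3 ratio for the given width."""
    return round(width * 3 / 4)


if __name__ == "__main__":
    for w, h in [(320, 240), (480, 360), (640, 480), (1280, 720)]:
        print(f"{w}x{h}", is_four_by_three(w, h))
```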

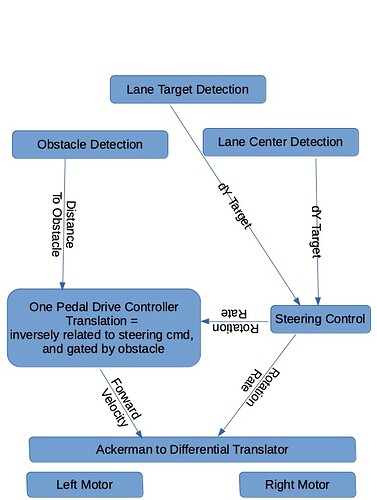

Quick update: I spoke to my professor about the minimum result that I need to achieve for my thesis. He said that the minimum result is perception of the environment, which means lane detection and detection of certain obstacles. I don’t need to achieve lane keeping, but it would be amazing if I did. So I’m trying to get lane detection working in turns. After that I will look for an obstacle detection algorithm for cars, stop signs, or pedestrians. After that, my focus will mainly be on writing the thesis and, time permitting, I can think about improvements of any kind.

Correct me if I am wrong, but (AFAIK) a “stop sign” isn’t an obstacle, right?

A person in the road, or another car, or a cow, or a fallen tree - these are obstacles, but a stop sign (or a traffic light) isn’t an obstacle.

Or, am I missing something here?

Correct, a stop sign isn’t an obstacle. I was just brainstorming while writing this. I basically need to prove that I can detect certain things. It doesn’t really matter what I am trying to detect; the easier to implement, the better.


Do you have to use the camera for obstacle detection, or can you use another sensor (e.g. ultrasound)?

/K

Absolutely!

Forgive me for being a bit long-winded, but the topic of engineering/over-engineering reminded me of a story I read in a 1950s/1960s-era trade magazine for the television repairmen of that day.

Yes, I am only using the Raspberry Pi Camera v2 for that.

Did you see my posting on the setup for the raspberry pi camera up above?

https://forum.dexterindustries.com/t/autonomous-driving-gopigo3-bachelor-project/8685/55

There are a few cute settings in the current config file that might be useful - the only caveat is to keep the aspect ratio at 4:3 if you want to use the entire field of view.

Okay, that’s a nice and realistic anecdote.

Yes, I have seen it but haven’t tested it yet! Still… very interesting and great input, thanks!

Is there any chance of finding a datasheet for the GoPiGo’s motors? I need basic information about them for my thesis, and there is no information at all.

Most of the hardware is remarkably well documented on the Dexter Industries GitHub page.

I don’t remember if there is a BOM for the motor assemblies, but they are, essentially, standard robot gear-motors that you can buy anywhere with a magnet wheel and a hall-sensor PCB attached.

The encoders are made of:

- A disk magnet that contains either six poles or 16 poles.

- Two hall sensors located 90° apart so that the robot can sense the direction of rotation.
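The reason for two sensors 90° apart is quadrature decoding: the order in which the two signals change tells you the direction. Below is a minimal, generic sketch of that idea, not the GoPiGo3 firmware. Assumptions: each sensor reads 0 or 1, the states are packed as (A << 1) | B, and the 00 → 01 → 11 → 10 sequence is treated as "forward" (real wiring may be reversed). The `counts_per_motor_rev` and `gear_ratio` parameters are placeholders; take the real values from the GoPiGo3 documentation/source on GitHub or measure them.

```python
# (previous_state, new_state) -> change in count; states are (A << 1) | B
TRANSITIONS = {
    (0b00, 0b01): +1, (0b01, 0b11): +1, (0b11, 0b10): +1, (0b10, 0b00): +1,
    (0b01, 0b00): -1, (0b11, 0b01): -1, (0b10, 0b11): -1, (0b00, 0b10): -1,
}


def decode(samples):
    """Accumulate a signed count from a sequence of (A, B) sensor readings."""
    count = 0
    prev = None
    for a, b in samples:
        state = (a << 1) | b
        if prev is not None:
            # An unchanged state, or an invalid jump where both bits flip, adds 0.
            count += TRANSITIONS.get((prev, state), 0)
        prev = state
    return count


def counts_to_output_degrees(count, counts_per_motor_rev, gear_ratio):
    """Convert a count into degrees of output-shaft (wheel) rotation.

    Both parameters are placeholders, not confirmed GoPiGo3 values.
    """
    return count * 360.0 / (counts_per_motor_rev * gear_ratio)


if __name__ == "__main__":
    forward = [(0, 0), (0, 1), (1, 1), (1, 0), (0, 0)]
    print(decode(forward))                  # 4
    print(decode(list(reversed(forward))))  # -4
```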

GitHub is your friend.

Anyone else would end up looking there to answer this question.

Also, they have schematics for just about everything there.

What prevents you from referencing the entire robot instead of every individual component?

Alright guys, time for an update… I was trying to get this lane detection algorithm from GitHub working, but there are two main problems. First, the Raspberry Pi 3 is not powerful enough to run this algorithm in real time, and second, the positioning of my camera is pretty bad. When the GoPiGo is in a turn, I only “see” the outer lane line and not the inner one… So I am not really sure whether I can make this algorithm dumber and easier to use, or whether I should use something else…

Up to the histogram step it worked quite well… But once the lane search started, everything failed hard…
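For reference, the histogram step in this kind of sliding-window lane finder usually looks something like the sketch below: count the lane pixels in each column of the bottom half of a thresholded (ideally bird’s-eye) binary image, and take the peak on each side of the center as the base of each lane line. This is a generic sketch, not the GitHub code being discussed; `find_lane_bases` and the `min_peak` threshold are hypothetical. Returning None for a missing side is one simple way to cope with turns where only the outer line is visible, instead of letting the lane search chase noise.

```python
import numpy as np


def find_lane_bases(binary, min_peak=10):
    """Return (left_x, right_x) column positions of lane-line bases.

    binary: 2-D array where lane pixels are nonzero. Either result is None
    when the tallest column on that side has fewer than min_peak lane pixels.
    """
    height, width = binary.shape
    bottom = binary[height // 2:, :]              # rows closest to the robot
    histogram = np.count_nonzero(bottom, axis=0)  # lane pixels per column
    midpoint = width // 2
    left_half, right_half = histogram[:midpoint], histogram[midpoint:]

    left_x = int(np.argmax(left_half)) if left_half.max() >= min_peak else None
    right_x = (int(np.argmax(right_half)) + midpoint
               if right_half.max() >= min_peak else None)
    return left_x, right_x


if __name__ == "__main__":
    img = np.zeros((100, 200), dtype=np.uint8)
    img[50:, 40] = 255   # synthetic left line
    img[50:, 150] = 255  # synthetic right line
    print(find_lane_bases(img))  # (40, 150)
    img[:, 40] = 0               # only the outer (right) line visible
    print(find_lane_bases(img))  # (None, 150)
```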

This is the current code:

Do you have the camera set up to use the entire field-of-view? Depending on the video mode you set up, it’s really easy to get a much more limited FOV than you want.

Messing with the camera and trying to figure out how to make the latest rpi_camera updates work with GoPiGo O/S, I discovered two things:

- The video mode has to be one that allows the full camera sensor to be used instead of a subset of it.

- You want to set a 4:3 aspect ratio, as that appears to be the aspect ratio of the standard Raspberry Pi camera’s sensor.

You can get fancier cameras with higher resolution and a wider FOV, but I don’t know if you have time for that.

Another option is to get an add-on lens kit, like they sell for smartphones. For example, if you add a fish-eye lens adapter it will increase the FOV, but will add spherical distortion. If you can compensate for spherical distortion in software, that might be a way to do it.
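To give an idea of what “compensating in software” means, here is a minimal sketch of a simple radial distortion model and its numerical inverse. Assumptions: coordinates are normalized and centered on the optical axis, and k1/k2 are lens coefficients you would get from calibrating the camera (the value in the example is made up). A real fisheye adapter usually needs a dedicated fisheye model; OpenCV’s calibration functions, including its `cv2.fisheye` module, handle whole images once the lens is calibrated.

```python
def distort(x, y, k1, k2=0.0):
    """Map an undistorted normalized point to where the lens places it."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2=0.0, iterations=20):
    """Invert distort() by fixed-point iteration (fine for moderate distortion)."""
    x, y = xd, yd  # initial guess: assume no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


if __name__ == "__main__":
    k1 = -0.2  # made-up barrel-distortion coefficient
    xd, yd = distort(0.3, -0.2, k1)
    print(undistort(xd, yd, k1))  # approximately (0.3, -0.2)
```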

Is your Raspberry Pi throttling because of voltage or heat? @cyclicalobsessive has some nifty scripts that show exactly that kind of problem.
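This is not @cyclicalobsessive’s script, just a minimal sketch of the same idea: the Raspberry Pi firmware reports under-voltage and thermal throttling through `vcgencmd get_throttled`, which prints a hex bit mask such as `throttled=0x50000`. The bit meanings below follow the Raspberry Pi documentation; the helper names are hypothetical, and `read_throttled()` only works on a Pi where `vcgencmd` is installed (Python 3.7+ for `capture_output`).

```python
import subprocess

# Bit positions in the get_throttled mask, per the Raspberry Pi documentation.
THROTTLE_BITS = {
    0: "under-voltage detected",
    1: "ARM frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "ARM frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output):
    """Turn 'throttled=0x...' into a list of human-readable flags."""
    value = int(output.strip().split("=", 1)[1], 16)
    return [msg for bit, msg in sorted(THROTTLE_BITS.items()) if value & (1 << bit)]


def read_throttled():
    """Query the firmware directly (Raspberry Pi only)."""
    out = subprocess.run(["vcgencmd", "get_throttled"],
                         capture_output=True, text=True, check=True).stdout
    return decode_throttled(out)


if __name__ == "__main__":
    print(decode_throttled("throttled=0x50000"))
```

An empty list means no under-voltage or throttling has been flagged since boot.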

Raspberry Pi 4 boards are not that expensive, comparatively. Assuming that the software can handle it, you want to go with the largest amount of RAM you can get, as RAM = speed. Another thought I just had: make sure you assign enough RAM to the GPU so it can help you.

Also, don’t skimp on the SD card. Spend the extra dinero and get the best and fastest cards you can: A1-rated as a minimum.

Another speed boost is to forgo the SD card and use a small USB SSD instead, like the 500GB Seagate Expansion/One Touch SSDs, as they blast past SD cards in performance. Here in Russia they are the equivalent of about $80 US. The 1TB version is about $120 to $150, but you probably don’t need that much space.

Another tip is to turn on TRIM, as that will help the SD card/SSD last longer and run faster. Chances are, if you have a really good, name-brand SD card, TRIM will work right out of the box without any additional setup. If you use an SSD, you will probably have to enable discard/TRIM in software. You can enable automatic TRIM on the SD card/SSD by enabling the fstrim.timer systemd service. You can find more information here:

https://www.jeffgeerling.com/blog/2020/enabling-trim-on-external-ssd-on-raspberry-pi

What say ye?

Very good question… Not really sure how to check that. All I know is that I am streaming the video feed with “cv2.VideoCapture(-1)”.

Yes, I am using 640x480 as a compromise between image quality and complexity.
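Some quick arithmetic on that compromise: 640x480 has four times as many pixels as 320x240, and 480x360 has 2.25 times as many. Assuming most per-pixel steps (color conversion, thresholding, the histogram) scale roughly with pixel count, the sketch below shows the relative workload; the helper names are hypothetical.

```python
RESOLUTIONS = [(320, 240), (480, 360), (640, 480)]


def pixel_count(width, height):
    return width * height


def relative_cost(width, height, base=(320, 240)):
    """Pixel count relative to the base resolution (rough proxy for work per frame)."""
    return pixel_count(width, height) / pixel_count(*base)


if __name__ == "__main__":
    for w, h in RESOLUTIONS:
        print(f"{w}x{h}: {pixel_count(w, h)} pixels, {relative_cost(w, h):.2f}x")
```

If the lane-finding steps don’t need full detail, shrinking each frame with `cv2.resize(frame, (320, 240))` before processing is one option to try.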

Yeah, there is no time to get more hardware. This hardware needs to be enough; everything else needs to be software.

Not sure, but I tested the code and it was extremely slow… Like a couple of seconds till the camera recognized a new scene.
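To see where those seconds go, it can help to time each part of the pipeline separately. Here is a minimal sketch of a timing helper (the name `measure_fps` is hypothetical) that calls a per-frame function repeatedly and reports frames per second.

```python
import time


def measure_fps(process_frame, n_frames=30):
    """Call process_frame() n_frames times and return the achieved frames per second."""
    start = time.perf_counter()
    for _ in range(n_frames):
        process_frame()
    elapsed = time.perf_counter() - start
    return n_frames / elapsed if elapsed > 0 else float("inf")
```

For example, with `cap = cv2.VideoCapture(-1)`, `measure_fps(cap.read)` gives the capture-only rate; wrapping capture plus your lane-detection code in one function and measuring again shows how much the processing itself costs.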

The main problem was, and probably still is, the Raspberry Pi supply shortage right now. So I don’t think I could get a Pi 4 even if I wanted to.