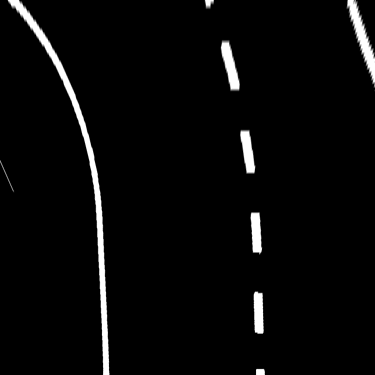

Do you have the camera set up to use its entire field of view (FOV)? Depending on the video mode you choose, it's really easy to end up with a much narrower FOV than you want.

Messing with the camera and trying to figure out how to make the latest rpi_camera updates work with GoPiGo O/S, I discovered two things:

- The video mode has to be one that allows the full camera sensor to be used instead of a subset of it.

- You want a 4:3 aspect ratio, since that appears to be the aspect ratio of the standard Raspberry Pi camera's sensor.
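To make those two points concrete, here is a small Python sketch that filters a sensor-mode table for modes that use the whole sensor at roughly 4:3. The table is my transcription of the legacy picamera documentation for the V2 (Sony IMX219) module, so treat it as an assumption and check it against your own camera and camera stack (libcamera/Picamera2 enumerate modes differently). The helper names are hypothetical.

```python
from typing import NamedTuple


class SensorMode(NamedTuple):
    number: int      # the value you would pass as sensor_mode
    width: int
    height: int
    full_fov: bool   # True if the docs list the mode as full field of view
    max_fps: float


# Assumption: V2 camera (Sony IMX219) modes as listed in the legacy
# picamera documentation. Verify them for your own camera/firmware.
V2_SENSOR_MODES = [
    SensorMode(1, 1920, 1080, False, 30),
    SensorMode(2, 3280, 2464, True, 15),
    SensorMode(3, 3280, 2464, True, 15),
    SensorMode(4, 1640, 1232, True, 40),
    SensorMode(5, 1640, 922, True, 40),
    SensorMode(6, 1280, 720, False, 90),
    SensorMode(7, 640, 480, False, 200),
]


def full_fov_modes(modes, aspect=4 / 3, tolerance=0.02):
    """Modes that use the whole sensor and are within `tolerance` of `aspect`.

    3280x2464 works out to about 1.331, close to but not exactly 4:3,
    which is why an exact ratio comparison would miss it.
    """
    return [m for m in modes
            if m.full_fov and abs(m.width / m.height - aspect) <= tolerance]


def fastest_full_fov_mode(modes, aspect=4 / 3):
    """Pick the full-FOV mode with the highest frame rate, or None."""
    candidates = full_fov_modes(modes, aspect)
    return max(candidates, key=lambda m: m.max_fps) if candidates else None
```

With this table the pick is mode 4 (1640x1232, 2x2 binned). On the legacy picamera stack you would then open the camera with something like `PiCamera(sensor_mode=4, resolution=(1640, 1232))`; by contrast, 1920x1080 (mode 1) only reads a cropped center of the sensor, which is exactly the "limited FOV" trap.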

You can get fancier cameras with higher resolution and a wider FOV, but I don’t know if you have time for that.

Another option is to get an add-on lens kit, like the ones sold for smartphones. A fish-eye lens adapter, for example, will increase the FOV but will add fish-eye (barrel) distortion. If you can compensate for that distortion in software, that might be a way to do it.
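Compensating for lens distortion usually means calibrating the camera once and then inverting a distortion model. As a minimal sketch, assuming the common two-term radial (Brown-Conrady) model and made-up coefficients `k1`, `k2` (real ones would come from a calibration, for example OpenCV's `calibrateCamera`), this undoes the distortion for a single point:

```python
def distort(x, y, k1, k2):
    """Apply two-term radial distortion to normalized image coordinates.

    Normalized means x = (u - cx) / fx and y = (v - cy) / fy, with the
    focal lengths and principal point taken from a camera calibration.
    A negative k1 gives barrel distortion (points pulled toward the center).
    """
    r2 = x * x + y * y
    scale = 1 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert distort() by fixed-point iteration.

    The model has no closed-form inverse, so start from the distorted
    point and repeatedly divide out the scale factor evaluated at the
    current estimate. This converges quickly for moderate distortion.
    """
    xu, yu = xd, yd  # initial guess: assume no distortion
    for _ in range(iterations):
        r2 = xu * xu + yu * yu
        scale = 1 + k1 * r2 + k2 * r2 * r2
        xu, yu = xd / scale, yd / scale
    return xu, yu
```

For whole video frames you would normally let OpenCV do this work (`cv2.undistort`, or the `cv2.fisheye` module for strong fish-eye lenses, where a simple two-term model may not be enough) rather than loop over pixels in Python.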

Is your Raspberry Pi throttling because of voltage or heat? @cyclicalobsessive has some nifty scripts that show exactly that kind of problem.
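This is not @cyclicalobsessive's code, but the basic check is easy to sketch: the firmware command `vcgencmd get_throttled` prints a bit mask such as `throttled=0x50000`, and the bits below are the ones the Raspberry Pi firmware documents. A minimal Python decoder (function names are my own):

```python
import subprocess

# Bits of the `vcgencmd get_throttled` mask. The low bits describe the
# current state; bits 16-19 are sticky and record events since boot.
THROTTLE_BITS = {
    0: "under-voltage detected now",
    1: "ARM frequency capped now",
    2: "currently throttled",
    3: "soft temperature limit active now",
    16: "under-voltage has occurred since boot",
    17: "ARM frequency capping has occurred since boot",
    18: "throttling has occurred since boot",
    19: "soft temperature limit has occurred since boot",
}


def decode_throttled(output):
    """Turn 'throttled=0x50000' into a list of human-readable flags."""
    value = int(output.strip().split("=", 1)[1], 16)  # base 16 accepts the 0x prefix
    return [msg for bit, msg in sorted(THROTTLE_BITS.items()) if value & (1 << bit)]


def read_throttled():
    """Query the firmware directly (only works on a Pi with vcgencmd)."""
    out = subprocess.run(["vcgencmd", "get_throttled"],
                         capture_output=True, text=True, check=True).stdout
    return decode_throttled(out)
```

An empty list from `read_throttled()` means no problems were recorded. An under-voltage flag usually points at the power supply or batteries, while the temperature flags point at cooling.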

Raspberry Pi 4 boards are not that expensive, comparatively. Assuming the software can use it, go with the largest amount of RAM you can get: more RAM means less swapping to the SD card, which is very slow. Another thought I just had: make sure you assign enough RAM to the GPU (the `gpu_mem` setting in `config.txt`) so it can help you.
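A quick way to see whether you are short on either is to check swap usage in `/proc/meminfo` and the GPU split reported by `vcgencmd get_mem gpu`, which prints something like `gpu=76M`. A minimal Python sketch; the 128 MB threshold is an assumption based on the usual recommendation for the legacy camera stack, not a hard rule, and the helper names are hypothetical:

```python
import subprocess

MIN_GPU_MB = 128  # assumption: common recommendation when using the camera


def parse_meminfo(text):
    """Turn /proc/meminfo text ('SwapFree:   2396 kB') into {field: value}."""
    info = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[name.strip()] = int(fields[0])  # kB for most fields, plain counts for a few
    return info


def swap_used_kb(info):
    """Swap currently in use; anything large means RAM is running out."""
    return info.get("SwapTotal", 0) - info.get("SwapFree", 0)


def parse_gpu_mem(output):
    """Parse the 'gpu=76M' line printed by `vcgencmd get_mem gpu` into MB."""
    return int(output.strip().split("=", 1)[1].rstrip("M"))


def memory_report():
    """Read the live values (needs /proc and vcgencmd, i.e. a real Pi)."""
    with open("/proc/meminfo") as f:
        info = parse_meminfo(f.read())
    out = subprocess.run(["vcgencmd", "get_mem", "gpu"],
                         capture_output=True, text=True, check=True).stdout
    gpu_mb = parse_gpu_mem(out)
    return {
        "mem_total_kb": info.get("MemTotal", 0),
        "swap_used_kb": swap_used_kb(info),
        "gpu_mb": gpu_mb,
        "gpu_ok": gpu_mb >= MIN_GPU_MB,
    }
```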

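Also, don't skimp on the SD card. Spend the extra dinero and get the best and fastest card you can, A1-rated at a minimum. The A1 "Application Performance Class" guarantees at least 1500 random-read and 500 random-write IOPS, and small random reads and writes are most of what an operating system does.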

Another speed boost is to skip the SD card entirely and boot directly from a small USB SSD, like the 500 GB Seagate Expansion/One-Touch SSDs, which blast past SD cards in performance. Here in Russia they cost the equivalent of about $80 US. The 1 TB version is about $120-$150, but you probably don't need that much space.
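If you want to see the difference on your own hardware, a crude sequential-write test is enough. This Python sketch (the helper name, file size, and block size are my own choices) writes random data to a temporary file, forces it to disk with `fsync`, and reports MB/s. It does not measure small random I/O, which matters even more for an OS, so treat the number as a rough comparison only.

```python
import os
import tempfile
import time


def write_throughput_mb_s(directory, size_mb=64, block_kb=1024):
    """Write roughly size_mb of random data into directory and return MB/s.

    fsync() is called before stopping the clock so the page cache cannot
    hide the real speed of the card or drive. The temp file is removed.
    """
    block = os.urandom(block_kb * 1024)
    blocks = max(1, (size_mb * 1024) // block_kb)
    written_mb = blocks * block_kb / 1024
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # make sure the data actually hit the device
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    return written_mb / elapsed
```

Run it once against a directory on the SD card (for example `write_throughput_mb_s("/home/pi")`) and once against the SSD (a hypothetical mount point such as `write_throughput_mb_s("/mnt/ssd")`) and compare.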

Another tip is to turn on TRIM, which helps the SD card/SSD last longer and run faster. Chances are, if you have a really good name-brand SD card, TRIM will work right out of the box without any additional setup. If you use an SSD, you will probably have to enable discard/TRIM in software. You can then get automatic, periodic TRIM on the SD card/SSD by enabling the fstrim.timer service. You can find more information here:

https://www.jeffgeerling.com/blog/2020/enabling-trim-on-external-ssd-on-raspberry-pi
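To check whether the kernel will pass TRIM through to a given drive, you can look at its `discard_max_bytes` value in sysfs. A value of 0 means discards are not supported or not enabled, which is the situation Jeff Geerling's article deals with for USB SSDs. A small Python sketch, assuming the drive shows up as something like `sda` (USB SSD) or `mmcblk0` (SD card); the function name is hypothetical:

```python
from pathlib import Path


def trim_enabled(device, sysfs_root="/sys/block"):
    """Return True if the kernel reports a non-zero discard_max_bytes.

    device is a block device name such as 'sda' or 'mmcblk0'. A missing
    device or a value of 0 is reported as False.
    """
    path = Path(sysfs_root) / device / "queue" / "discard_max_bytes"
    try:
        return int(path.read_text().strip()) > 0
    except FileNotFoundError:
        return False
```

If this reports `False` for your SSD, follow the article above. Once discards work, `sudo systemctl enable --now fstrim.timer` keeps the periodic TRIM running across reboots. From the shell, `lsblk --discard` shows the same information.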

What say ye?