I got the EAI YDLIDAR X4 on Amazon. It was only a couple of bucks more than the AliExpress price mentioned in the book, and shipping was free and fast (I'm already a Prime member).

The ydlidar.com site has been down all weekend. Fortunately, I found the downloadable files at:

https://www.robotshop.com/en/ydlidar-x4-360-laser-scanner.html#Useful-Links

(Side note: I've had good luck ordering materials from robotshop.com. I have no financial interest in them, but I appreciate that they do things like make files available. [Note to self: buy something there to support them.])

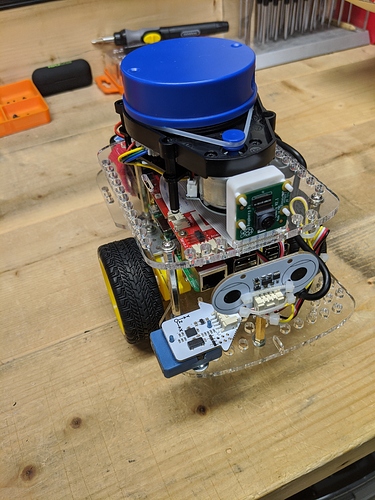

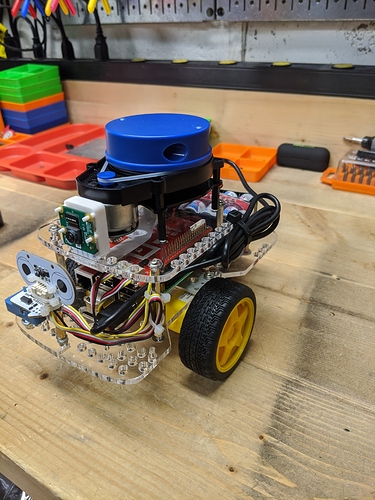

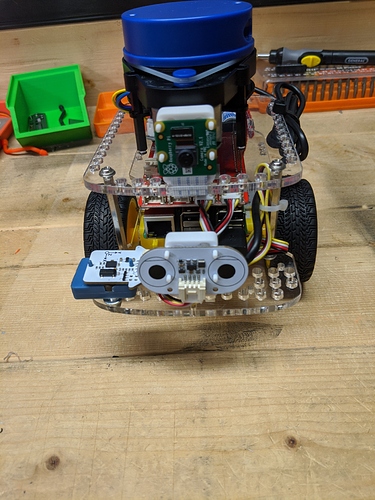

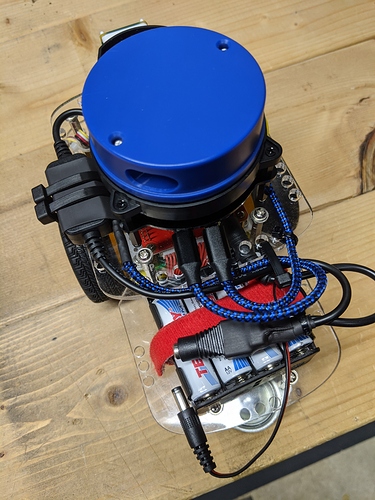

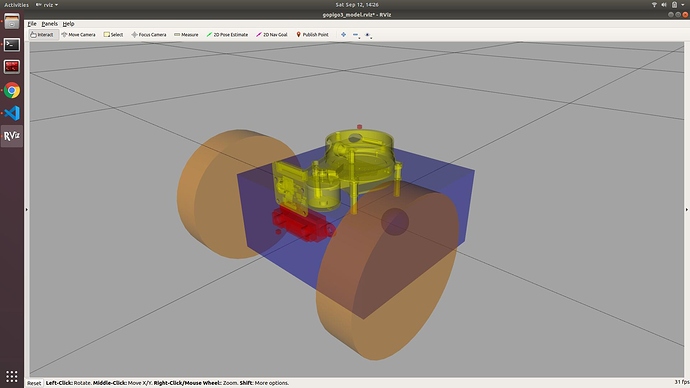

After verifying that the scanner worked with the Windows .exe file I downloaded, I mounted the LDS (laser distance sensor) on Finmark, my robot. This required rearranging some of the other sensors.

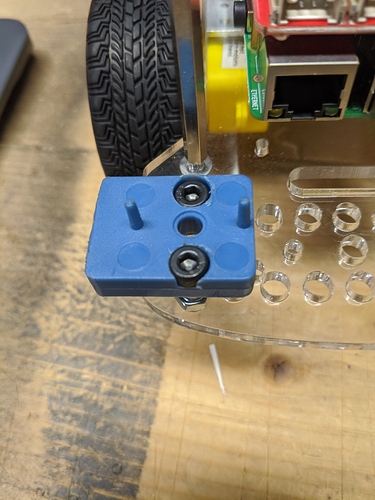

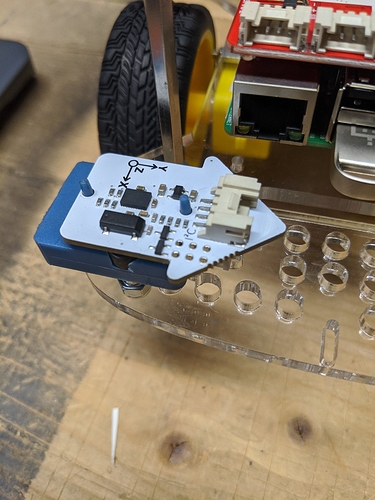

I moved the IMU to the front deck. To mount it, I removed the pegs from a sensor mount I had on hand. Since the only socket screws I had in the right size were too tall, I drilled out the holes to countersink the screw heads.

I would have preferred it closer to the center of the robot, but I think this will be OK.

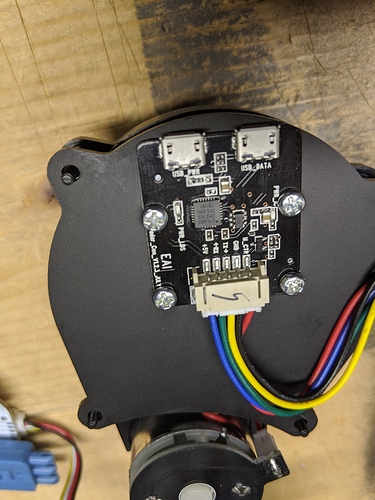

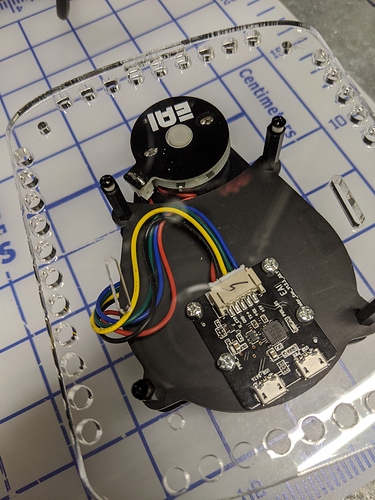

For the YDLIDAR, I drilled through the bottom plate so I could mount the USB adapter. (A note on drilling plastic: use a slow drill speed; clamp the piece firmly so the bit doesn't grab it and spin it [I speak from experience: just holding it isn't enough]; and put a sacrificial piece of soft wood underneath. Go slow.)

I used my own bolts and locking nuts; as positioned, they don't touch any of the electronics.

For the YDLIDAR itself, I decided to mount it with the scanner's own front facing the front of the robot. I just drilled holes through the mounting plate (see the note above about drilling plastic).

It ended up not quite centered, but I'm hoping it will be OK.

Since the distance sensor seems to be used only for looking straight ahead (and since the servo moved slightly every time I turned the robot on), I decided to mount it facing forward. I've ordered a mount (I could have designed and 3D printed one, but that seemed like too much trouble); in the meantime, I zip-tied the sensor to a standoff I had lying around.

The USB cable that came with the YDLIDAR is plugged into the RPi. I might look into a shorter cable for tidiness' sake.

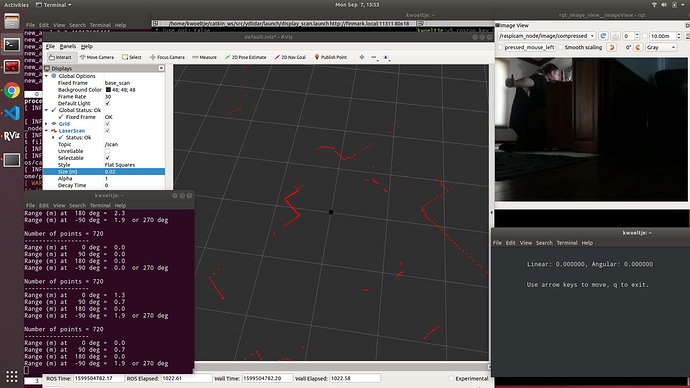

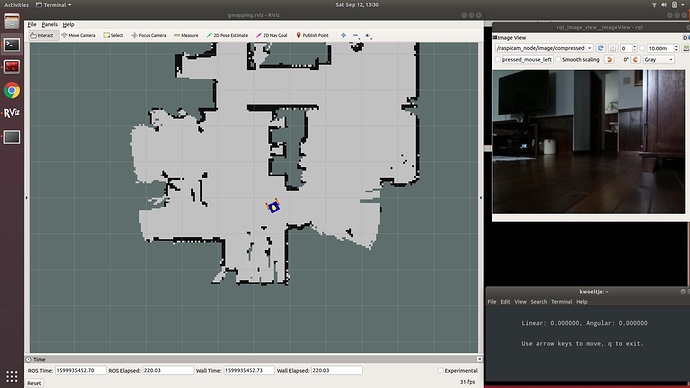

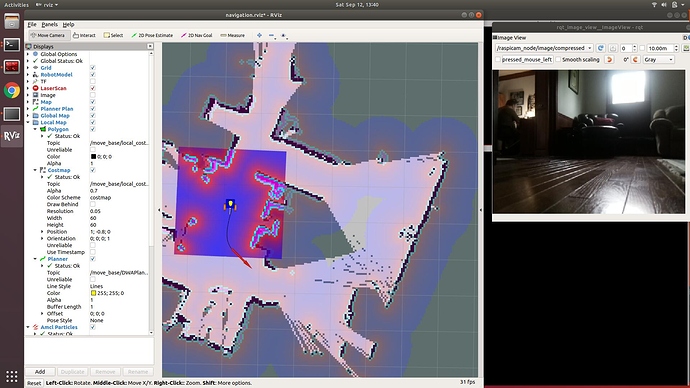

The ROS files appear to be on GitHub, so it shouldn't matter that the ydlidar.com site is down. Next step: installing those files.

/K